*

THE NIGHT MIGRATIONS

This is the moment when you see again

the red berries of the mountain ash

and in the dark sky

the birds' night migrations.

It grieves me to think

the dead won't see them—

these things we depend on,

they disappear.

What will the soul do for solace then?

I tell myself maybe it won't need

these pleasures anymore;

maybe just not being is simply enough,

hard as that is to imagine.

~ Louise Glück, Averno

Oriana:

Great poets are always poets of what I call “difficult affirmation.” Glück herself observed that women poets in particular are expected to be “in service of the life force.” She didn’t approve of not taking notice of the darker side of life, of the tendency to dismiss sadness by the commandment to “think positive.”

Glück never shrinks away from the shadow of mortality, or the difficult complexities of romantic relationships or those of being a mother — and a daughter taking care of her aging mother. Even the responsibilities of gardening and its various failures (as well as its grace, the resurrections, the blossoms) are found in her work.

Ultimately the moments of grace are what we depend on:

. . . you see again

the red berries of the mountain ash

and in the dark sky

the birds' night migrations.

The exquisite crimson berries, the mysteries of the night migrations — this is the life-giving beauty of the world that the dead lose. There is no point trying to be logical here and point out that the dead no longer feel pain either — the poet mourns that they lose the delights of beauty. We can’t imagine not-being — as Jung observed, the human psyche can’t imagine its annihilation — but we understand loss. And the loss of the beauty of the world is the ultimate, unavoidable heartbreak.

*

Here are excerpts from an article on Louise Glück that appeared on the day of the announcement that she won the Nobel Prize in Literature.

~ Several individual poems from her great volume of 1999, “Vita Nova,” carry that book’s title, borrowed from Dante, whose own “new life” began when he first glimpsed Beatrice, his great love. In the final poem of the book, Glück imagines a comic-brutal custody negotiation over a dreamed dog. “In the splitting up dream / we were fighting over who would keep / the dog, / Blizzard”:

Blizzard,

Daddy needs you; Daddy’s heart is empty,

not because he’s leaving Mommy but because

the kind of love he wants Mommy

doesn’t have, Mommy’s

too ironic—Mommy wouldn’t do

the rhumba in the driveway. Or

is this wrong. Supposing

I’m the dog, as in

my child-self, unconsolable because

completely pre-verbal? With

anorexia! O Blizzard,

be a brave dog—this is

all material; you’ll wake up

in a different world,

you will eat again, you will grow up into a poet!

“This is all material” is both the specious consolation people offer to suffering artists and writers and, as these very lines demonstrate, the simple truth. Even anorexia, which nearly killed Glück as a young woman, can be a punch line. (Elsewhere in the poem, the dog—a granola, like the mistress who does do the rhumba—won’t touch “the hummus in his dogfood dish.”) Is it a good or a bad thing to “grow up into a poet”? (Today, it certainly seems like a good thing.) But the poem doesn’t end there; its final lines must be some of the most gorgeous sentiments ever expressed about a bitter romantic adversary:

Life is very weird, no matter how it ends,

very filled with dreams. Never

will I forget your face, your frantic human eyes

swollen with tears.

I thought my life was over and my heart was broken.

Then I moved to Cambridge.

Here I must interject a personal memory. I visited Cambridge and was stunned by the fact that around Harvard Square there were more bookstores than any other kind of store or business. Small restaurants were overflowing with plants. I heard low-volume classical music in almost every block: a Haydn trio, a Vivaldi concerto. It was an embarrassment of riches, a paradise for the educated who pursue a lifelong education for the sheer joy of it.

When my favorite creative writing teacher, Lynn Luria Sukenick, was diagnosed with terminal cancer, she sold her house in California and moved to Cambridge. For as long as she could, she took daily walks around the place where she felt she belonged.

*

And now back to the article:

~ When I was growing up in Vermont, a few mountains over from her, Glück’s work was everywhere; she still feels to me like a seventies figure, something in the key of Joni Mitchell, because of all the bookshelves and coffee tables of that era where her books were found. She was “our” poet. Now I think of all the waiters and cheesemongers and cabbies and neighborhood people in Cambridge who know her, and think of her as “their” poet. Over many long dinners, I learned from her how to listen, how to formulate a meaningful insight, how to resist the romance of easy or convenient feelings.

After attending our wedding, Louise said she’d had a great time because she “loved spectacles of good intention.” When I told her how a friend was courting his new, young wife by reading aloud poems from her book “The Wild Iris,” she laughed and said something like “that book is very useful for people who prefer to view their carnal needs in spiritual terms.” Mommy’s too ironic, but today we’re all pretty blissed out that Louise Glück grew up into a poet. ~

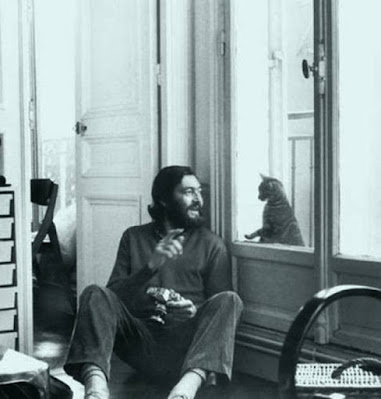

https://www.newyorker.com/books/page-turner/how-louise-gluck-nobel-laureate-became-our-poet

Louise Glück in 1977

*

ALBERT CAMUS: THE SHARED LATRINE, THE SILENCE, THE RADIANCE

~ For Camus, the lyrical sentiments were deeply rooted in the physical and human landscape of his native Algeria. They flowed from the childhood he spent in a poor neighborhood of Algiers, where he was raised by an illiterate and imperious grandmother and a deaf and mostly mute mother in a sagging two-story building, whose cockroach-infested stairwell led to a common latrine on the landing.

That latrine plays a pivotal role in his unfinished novel, The First Man (published posthumously in 1994). Wishing to keep the change he received after going to the store, Camus’s adolescent alter ego tells his grandmother that it had fallen into the latrine pit. Without a word, she rolls up a sleeve, goes to the hole, and digs for it. At that moment, Camus writes, “he understood it was not avarice that caused his grandmother to grope around in the excrement, but the terrible need that made two francs a significant amount in this home.”

Yet, like Sisyphus with his boulder, Camus claims his impoverished childhood as his own. In his preface to his first collection of essays, The Wrong Side and the Right Side (1937), Camus recalls that his family “lacked almost everything and envied practically nothing.” This is because, he explains, “poverty kept me from thinking all was well under the sun and in history.” Yet, at the same time, “the sun taught me that history was not everything.” Poverty was not a misfortune, he insists. Instead, it was “radiant with light.”

Radiance washes across the early essays, at times so fulsomely that it is hard to keep your head above the cascade of words. During an earlier visit to Tipasa, the sun-blasted pile of Roman rubble that overlooks the Mediterranean, Camus seems quite literally enthused — filled by the gods — as he goes pagan. This place, he announces, is inhabited by gods who “speak in the sun and the scent of absinthe leaves, in the silver armor of the sea, in the raw blue sky, the flower-covered ruins, and the great bubbles of light among the heaps of stone.” It is here, he declares, “I open my eyes and heart to the unbearable grandeur of this heat-soaked sky. It is not so easy to become what one is, to rediscover one’s deepest measure.”

Yes, it is surprising to think of the iconic black-and-white figure, wrapped in a trench coat and smoking a Gauloise, as the author of these words. It is more surprising, perhaps, to learn that before he wrote these words (or, for that matter, ever wore a trench coat), Camus had declaimed them while wandering with two friends through the Roman ruins. Yet this lyricism does burst through the austere prose of his novels, as when Meursault finds himself alone on the light-blasted beach with the “Arab” in The Stranger (1942) or when Rieux and Tarrou go for their nocturnal swim in The Plague (1947).

The lyricism of these essays — which run from the mid-1930s, when he was still an obscure twentysomething trying to become a writer, to the early 1950s, when he had become a celebrity who found writing a terrifying burden — reflects another trait Camus shared with Sisyphus. Like that ancient Houdini, who hated Hades with the passion of a lover of the sun, Camus hated ideologies and abstractions with the same passion, tying him fast to the one life and one world he would ever know. “There is no superhuman happiness, no eternity outside the curve of the days,” he writes in “Summer in Algiers.” All there is, he concludes, are “stones, flesh, stars, and those truths the hand can touch.”

*

Camus’s childhood was radiant with light, but also steeped in silence. Sharing the Algiers apartment was his Uncle Etienne, who spoke with difficulty and communicated mostly through hand gestures and facial expressions. His mother, Catherine Hélène Camus (née Sintès), lost most of her ability to speak when informed of the death of her husband, Camus’s father, during the First Battle of the Marne. And it is his mother who occupies the epicenter of the silence that enveloped Camus’s early life.

In one of his earliest essays, “Between Yes and No,” he writes that his mother spent her days cleaning other people’s homes and her nights thinking about nothing at her own home. She thought about nothing, he explains, because “[e]verything was there” at the apartment: her two children, her many tasks, her few pieces of furniture, her one memento of her husband (the shell splinter removed from his skull).

Her life was filled — what was there left to say? In an indelible portrait, Camus writes that, as a child, he would stare at his mother, who would “huddle in a chair, gazing in front of her, wandering off in the dizzy pursuit of a crack along the floor. As the night thickened around her, her muteness would seem irredeemably desolate.” Watching her, the boy is at first terrified by this “animal silence,” then experiences a surge of feeling that he believes must be love, “because after all she is his mother.”

This maternal silence, which soon came to assume a metaphysical presence, became the center of his work. Camus had striven his entire life, he writes in his preface to The Wrong Side and the Right Side, “to rediscover a justice or a love to match this silence.” By its refusal of words, Catherine Camus’s love for her son — like Cordelia’s for her father — was the greatest of loves. Recognizing this, though, did not fully reconcile Camus to the fundamental strangeness of his mother’s presence. What he wanted most in the world, he wrote in his notes for The First Man, was never to be had — namely, for his mother “to read everything that was his life and his being. […] His love, his only love, would forever be speechless.”

*

Invincible summers suggest indestructible hopes. But Personal Writings reminds us that, just as Camus did not do inspiration, so he also did not do hope. Contrary to coffee mug sentiments, hope is, Camus explains, the most terrible of all the evils because it is “tantamount to resignation. And to live is not to be resigned.”

This explains Camus’s paradoxical claim that while there is no reason for hope, that is never a reason to despair. As we face our era’s many crises, a glance at the volume’s shortest essay, “The Almond Trees,” might help. Writing these few pages soon after France declared war on Germany in 1939, Camus tells the reader that the first thing “is not to despair.” Instead, we must simply unite and act:

“Our task as [humans] is to find the few principles that will calm the infinite anguish of free souls. We must mend what has been torn apart, make justice imaginable again in a world so obviously unjust, give happiness a meaning once more to peoples poisoned by the misery of the century. […] [I]t is a superhuman task. But superhuman is the term for tasks we take a long time to accomplish, that’s all.” ~

~ “I want to keep my lucidity to the last, and gaze upon my death with all the fullness of my jealousy and horror” — a line that, when all is said and done, has said and done it all. (~ same article) (This reminds me that Rilke, dying of leukemia, refused morphine because he wanted to keep lucidity to the last.)

Oriana:

Nevertheless, there is inspiration and hope to be found in Camus. "We must imagine Sisyphus happy" is an unforgettable line. And his second famous novel, The Plague, is full of hope and inspiration. It's about the human capacity for solidarity and rising to heroism when a disaster calls for it. I regard The Plague as his greatest novel.

Mary:

Camus' memories of his "impoverished childhood" are striking in their denial of that impoverishment. They were poor enough that grandma would dig in the common latrine for a few lost coins, and yet the days were "radiant," filled with light. They had almost nothing, and yet "envied practically nothing." This seems a paradox but is a profound truth. The essentials are all there in the astonishment of existence itself. You have what there is, the endless radiance of light on all the surfaces of the world, the senses to apprehend its most common wonders, and even silence becomes a token of an unspoken but ever-present love. These are the basics; all the rest is mere elaboration.

No impossible distracting hopes, but no despair: living is unquestioningly good, goodness always a potential for the living. And these truths are embedded in the material world itself, the stones and earth, the body and the radiance of light on things. Abstractions and ideologies cannot do it justice. We are left with the abundance of things: "stones, flesh, stars, and those truths the hand can touch."

This all strikes me as faithful to my own memories, where some would think our circumstances far from the best, and yet what is remembered is the wonderful changes of light through our rooms and seasons, the feel and weight of ordinary things, the knowledge not of having much but of having all that was necessary. I do not remember what we didn't have, only the truth of what we did, the shape and flavor of days as we lived them, that will always remain the shape and flavor of home.

Oriana:

For me my childhood remains in memory as neither happy nor unhappy, but a startling mix of both. When I think of the happy times, one feature becomes central: I didn’t have to take care of the practical details. Adults did all the coping and worrying. I was free to imbibe the magic of the Carpathians or the Baltic, the downpours and blizzards (which I especially loved), the first timid green of spring. I didn’t have to arrange transportation, housing, meals, and so on. The adults had all the power, but because of the burden of practical matters, they were also the servant class (an insight I had only in adulthood, when at times I felt particularly oppressed by being in “the servant class”).

Childhood is a reprieve from “adult responsibilities.” I know there are exceptions: some children, often the eldest daughter, get “parentified,” the freedom of their childhood stolen from them. Camus obviously had sufficient freedom — and the radiance of the Algerian sun.

*

“But what is memory if not the language of feeling, a dictionary of faces and days and smells which repeat themselves like the verbs and adjectives in a speech, sneaking in behind the thing itself, into the pure present, making us sad or teaching us vicariously.” ~ Julio Cortazar

*

ANOTHER UNIVERSE BEFORE OURS

There was an earlier universe before the Big Bang, and evidence for its existence can still be observed in black holes, a Nobel Prize-winning physicist has said.

Sir Roger Penrose made the claim after recently winning the award for breakthroughs in Einstein’s general theory of relativity and proof of the existence of black holes.

Sir Roger argues that unexplained spots of electromagnetic radiation in the sky – known as ‘Hawking Points’ – are remnants of a previous universe.

The claim is part of his "conformal cyclic cosmology" theory of the universe, which suggests that these points are the final expulsion of energy, called ‘Hawking radiation’, transferred by black holes from the older universe.

A black hole is a region of space where matter has collapsed in on itself, producing a gravitational force so strong that not even light can escape.

Such an event may be occurring in the center of our galaxy; Reinhard Genzel and Andrea Ghez, who shared the Nobel Prize with Sir Roger, offered the most compelling evidence of a supermassive black hole in the middle of the Milky Way.

There is a possibility that the timescale for the complete evaporation of a black hole could be longer than the age of our current universe, in which case that evaporation could not yet be detected.

“I claim that there is observation of Hawking radiation. The Big Bang was not the beginning. There was something before the Big Bang and that something is what we will have in our future”, Sir Roger said, according to The Telegraph.

“We have a universe that expands and expands, and all mass decays away, and in this crazy theory of mine, that remote future becomes the Big Bang of another aeon.

“So our Big Bang began with something which was the remote future of a previous aeon and there would have been similar black holes evaporating away, via Hawking evaporation, and they would produce these points in the sky, that I call Hawking Points.

“We are seeing them. These points are about eight times the diameter of the Moon and are slightly warmed up regions. There is pretty good evidence for at least six of these points.”

However, many have criticized the idea, and the existence of this type of radiation from black holes is yet to be confirmed.

Moreover, if an infinitely large universe in one existence has to become an infinitely small universe in the next, it would be required that all particles lose their mass as the universe ages, a notion that has also been met with skepticism.

According to standard cosmology, the universe underwent a brief expansion or ‘inflation’ after the Big Bang, which would have removed irregularities in the structure of the universe.

In response, Sir Roger said that black holes were also dismissed as only existing in mathematics, until their existence in reality was proved.

"People were very skeptical at the time, it took a long time before black holes were accepted... their importance is, I think, only partially appreciated", he said, as reported by the BBC.

Mary:

Why is the idea of another universe before our own, and the promise of another after the death of ours, so enchanting? Not because there is any personal relevance for ourselves or our species; we’ll be long gone. But I think the old scenario of a universe grown increasingly cold and empty, all the great lights burnt out, entropy endlessly continuing and nothing left to look for, is hugely unappealing, a disappointment and almost an insult to our ever-designing minds. But the idea of successive universes, new ones exploding out of the death of the old, appeals to us like all our stories of death and resurrection: fulfilling and satisfying and exciting, even if theoretical and beyond any possibility of our being there to see it.

Oriana:

Yes. And it seems that the ancient Hindu mythology had that understanding already thousands of years ago: this universe is only one in an everlasting cycle.

*

COULD THE SOVIET UNION HAVE SURVIVED?

~ ‘No one has suggested a convincing alternative scenario.’ ~ Rodric Braithwaite, British Ambassador to the Soviet Union (1988-91) and author of Armageddon and Paranoia: the Nuclear Confrontation (Profile, 2017).

People still argue about the fall of the Roman Empire. They are not going to agree quickly on why the Soviet Union collapsed when it did. Some think it could have lasted for many years, others that the collapse was unforeseeable. Andrei Sakharov, the Soviet dissident scientist, foresaw it decades before it happened.

Victory in war took the Soviet armies to the centre of Europe, where they stayed. The Soviet Union’s seductive ideology had already given it influence across the world. But after Stalin’s death in 1953 the ideology started looking threadbare, even at home. In Eastern Europe, inside the Soviet Union itself, the subject peoples were increasingly restless for freedom. Soviet scientists were the equal of any in the world, but their country was too poor to afford both guns and butter and their skills were directed towards matching the American military machine, rather than improving the people’s welfare. It worked for a while. But in 1983 the Soviet Chief of Staff admitted that ‘We will never be able to catch up with [the Americans] in modern arms until we have an economic revolution. And the question is whether we can have an economic revolution without a political revolution’.

The Soviet leaders were not stupid. They knew something had to be done. In 1985, after three decrepit leaders died in succession, they picked Mikhail Gorbachev to run the country: young, experienced, competent and – they wrongly thought – orthodox. But Gorbachev believed that change was inescapable. He curbed the KGB, freed the press and introduced a kind of democracy. He was defeated by a conservative establishment, an intractable economy and an unsustainable imperial burden. It was the fatal moment, identified by the 19th-century French political philosopher Alexis de Tocqueville, when a decaying regime tries to reform – and disintegrates.

Russians call Gorbachev a traitor for failing to prevent the collapse by force. Foreigners dismiss him as an inadequate bungler. No one has suggested a convincing alternative scenario.

*

‘Aimed at fixing the faults in Soviet society, Gorbachev’s policies emphasized them.’ ~ James Rodgers, Author of Assignment Moscow: Reporting on Russia from Lenin to Putin (I.B. Tauris, 2020) and former BBC Moscow correspondent.

Lauded in its stirring anthem as the ‘Indestructible Union of Free Republics’, the USSR entered the 1980s as a superpower. Few foresaw then that it would collapse early in the following decade. While the ‘free republics’ part of the heroic lyric was barely believed outside – or, indeed, inside – the territory which they covered, the ‘indestructible’ part seemed much more convincing.

Yet the system was failing. Yuri Andropov, who became Soviet leader in 1982 after being head of the KGB, understood that – the secret police were always the best-informed part of Soviet society. He launched reforms to address the economic stagnation he inherited.

Andropov’s death in 1984 was followed by that of his successor, Konstantin Chernenko, the year after. The Communist elite turned then to relative youth and energy. Mikhail Gorbachev was 54. In him, the Soviet Union had a leader who believed that its creaking system could be reformed and made fit for purpose. It could not. Aimed at fixing the faults in Soviet society, Gorbachev’s policies of perestroika (‘reconstruction’) and glasnost (‘openness’) – it was a time of unprecedented press freedom, for both Russian and international journalists – ended up emphasizing them.

Attempts to crack down on the widespread drunkenness that plagued the Soviet workplace proved especially unpopular with large parts of the population. As the author of Vodka and Gorbachev, Alexander Nikishin, later asked: ‘Did he understand who he was getting into a fight with?’ The question could be applied to Gorbachev’s wider strategy. After hardliners in his own party tried – and failed – to take power in a short-lived coup in 1991, the Soviet system was finished.

The Soviet economy was not strong enough both to maintain a military system at superpower level and give its people a good standard of living. On my first trip to Moscow, as a language student in the 1980s, I bought a record of that Soviet national anthem. I paid more for the plastic bag to carry it in than for the actual record. It is a small example of the economic contradictions that meant the Soviet Union could not have survived.

*

‘In Soviet Kazakhstan, the scale of resistance took Moscow by surprise.’ ~ Joanna Lillis, Author of Dark Shadows: Inside the Secret World of Kazakhstan (I.B. Tauris, 2019).

In 1986, when Kazakhs took to the streets to protest against the Soviet government in Moscow, nobody had an inkling that firing up the demonstrators in Soviet Kazakhstan was a heady cocktail of ingredients that would gather momentum around the USSR and help bring it down five years later. Disillusion with out-of-touch leaders ruling them from the distant Kremlin; disenchantment with inequality in a hypocritical communist state that professed equality for all; stirrings of national pride among the Kazakhs, who went out to protest against the Kremlin’s imperious imposition of a Russian leader from outside Kazakhstan. The scale of resistance took Moscow by surprise, an indication of how disconnected from the thinking of ordinary Soviet citizens their leaders had become.

The reformer Mikhail Gorbachev had recently come to power promising glasnost, so that his people could freely voice their opinions in a more tolerant Soviet Union. When the Kazakhs took to the streets to do that, Gorbachev sent in the security forces to quell the demos with bloodshed.

The Kazakhs’ bid to make Moscow heed their frustrations failed. But the rejection of high-handed colonial rule by other nations in the Soviet Union, who were unofficially expected to kowtow to Russian superiority while officially all the USSR’s peoples were equal, soon became a driving force in the country’s collapse. In 1989, the fall of the Berlin Wall, the disintegration of the Eastern Bloc and the humiliating Soviet withdrawal from Afghanistan after a decade of pointless warfare confirmed that the superpower was waning. In 1986, the Kazakhs had no idea all this was looming. But they were unwittingly holding up a mirror to the failings of the Soviet system, which was not fit for purpose – and could not, in those historical circumstances, survive.

*

‘If the Communist Party had retained control of the media, it could, perhaps, have survived anything’ ~ Richard Millington, Senior Lecturer in German at the University of Chester.

The Soviet Union could not have survived, because by 1991 the Communist Party had lost control of the media and thus the public sphere. Key to the survival of any dictatorship is strict control of the media, which shapes public opinion and promotes tacit acceptance of a regime. Though many Soviet citizens may have claimed not to believe what was written in their newspapers, they were never aware of just how far removed from reality the reports were. When Mikhail Gorbachev ascended to power in 1985, it was his policy of glasnost that let the genie out of the bottle.

In his attempt to ‘open up’ society, Gorbachev permitted the press more freedom of expression. Some historians have viewed this move as a result of the fact that Gorbachev (born in 1931) was the first leader of the Soviet Union to have cut his political teeth in a de-Stalinized USSR. But his policy backfired. Glasnost meant that news outlets could lay bare the failings of the Soviet system and the Communist Party. Perhaps more than anything else, their reporting of the horrific accident at the Chernobyl nuclear power plant in 1986 illustrated the Party’s incompetence and shredded citizens’ belief not only in its ability to govern effectively, but also to keep them safe. In fact, in 2006, Gorbachev pinpointed Chernobyl and the resulting media fallout as the real cause of the collapse of the Soviet Union.

By 1991 the game was up for the Communist Party. Glasnost had permitted dissenting voices to be heard and political movements that had once been suppressed to gain traction and support. After a failed attempt by Communist hardliners to retake control in August of that year, the Party was banned and with it disappeared the glue that was keeping the Soviet Union together. If the Communist Party had retained control of the media, it could, perhaps, have survived anything. We need only look to the Chinese example for what can happen when a dictatorship remains in full control of the public sphere.

Bad art and dictatorship go hand in hand. This is a Soviet poster commemorating the fifth anniversary of the October Revolution.

Oriana:

Control of the media is tremendously important for the survival of any dictatorship. On the other hand, the modern world makes total control of information increasingly impossible. Will China eventually have to liberalize? Will it ever acknowledge the genocidal crimes of Mao? Perhaps not within my lifetime, but perhaps . . . who knows, the tyranny could crumble faster than we think. Once I witnessed the Berlin Wall fall, I realized that empires really do fall.

Mary:

Yes, empires do fall, and I am sure the article is correct in its conclusions about the opening up of the media being essential to that fall. I also think Chernobyl was an essential part of the collapse. People were being lied to not only on a massive scale, but with lies that couldn't hold up in the face of what was happening. Not only the unpreparedness, but the refusal to protect, the willingness, even determination of the state to "bury the bodies" along with the villages that were plowed under, the utter inability of the bureaucracy to proceed logically to contain the damage and take steps to minimize it, instead continuing the old policies of denying and quashing resistance...spelled the end of that clumsy and inadequate system. The steps that were just starting to move away from the old state's habits, like Gorbachev's glasnost, only made the collapse more possible.

Oriana:

It’s much harder to control the media 100% in our era. Stalin could do it, with fewer media to control, but Putin can’t: the world is too interconnected now, and the news of political opponents being poisoned or mysteriously falling out the window, of independent journalists being executed mafia-style, can no longer be suppressed. And Putin’s own turn to be poisoned may yet come, if history is the correct guide (though I'd much rather foresee a "democratic" end to his corrupt regime).

Maybe I'm naive, but my personal hope for Russia is the arrival of true democracy. I know it won’t happen within our lifetime. Still, as I said, the Berlin Wall did fall, so maybe, maybe . . .

Future historians will decide to what extent Chernobyl played a part in the collapse of the Soviet Union. There were many events, each a step closer. One was Yeltsin’s trip to the US (sponsored by the Esalen Institute!), during which he toured a grocery market and was dazzled by the American abundance. (I too remember being dazzled during my first supermarket visit; my hosts fully expected that reaction, and that was the first place they took me.) During that trip he realized for the first time the enormity of the lies which were the foundation of the Soviet propaganda, and this was apparently crucial to his deciding that the Soviet Union had to go.

*

"I've been around the ruling class all my life and I've been quite aware of their total contempt for the people of the country." ~ Gore Vidal

*

THE INTENSITY OF FAITH MAY DEPEND ON THE BELIEF IN THE DEVIL

Oriana: I was told that god chooses who will believe in him and who won’t: “Faith is a gift.” It is a gift he gives to some and not to others (who are doomed to hell). I was saddened by this, but not surprised: by that point, I knew enough bible stories to realize that god played favorites. Oddly, no group seemed as likely to possess the gift of faith as old, uneducated women. But now it strikes me that it wasn't their belief in god that was deep and impervious to doubt. It was their belief in the devil.

And when you believe in the devil — when in fact you've seen the devil (my grandmother was an Auschwitz survivor) — you need the Guardian Angels, the kindly saints, and Mary, the Mother of Mercy.

In modern times the belief in the devil has dramatically diminished. John Guzlowski observed: "When I was studying Early American lit, I saw the devil talked about a lot." I think that's because when we look at the past, we see the brutal harshness of life back then: children dying young (sometimes all the children in a family died before reaching adulthood), awful diseases for which there were no remedies, a great deal of excruciating poverty, a cruel judicial system (a child could be hanged for minor theft), the prevalence of abuse of every kind, and more instances of sheer misery. Someone had to be blamed, and since it couldn't be god or the king, it was easy to assume that the world was full of demons.

Nowadays, even though the news may always sound catastrophic, on the whole we live in a much better, kinder world. Religion thrives on misery; better living conditions and more control over things in our lives mean less need for religion — at least the backward, devil-dominated kind.

As for the argument that faith is a gift given only to the few, why are those few mostly poorly educated? It’s often been argued that the greatest enemy of faith is reason, our allegedly god-given mind. And yet if god wanted someone, even an evolutionary biologist, to believe in him, that would be in the Almighty's power, wouldn't it? But I'm also familiar with the argument to the contrary — he won't interfere with a person's free will by providing evidence of his existence. Obviously anything whatever can be argued, and has been.

As for the intensity of both faith and the belief in devils, we need only think of the Middle Ages, also called The Age of Faith — and of Dante’s Inferno and Milton’s Paradise Lost. That’s probably what Wallace Stevens meant when he wrote “The death of Satan was a catastrophe for the imagination.”

True, nobody could write another Inferno or Paradise Lost anymore, but we can live with it. Also, we have war movies. I mean especially the documentaries. They take us beyond anything imagined in the Inferno.

Note the many faces all over Satan’s body; that’s seen in a lot of religious art, medieval and beyond. Satan isn't just "two-faced"; he has multiple faces, all of them presumably lying.

*

ADULTS WHO ACT LIKE BABIES

~ There’s a baby in every family. Sometimes it’s an actual baby, only a few months old, but more often it’s an idea of a baby. The siblings themselves may have all grown up; they may be children, teenagers, or adults by now. And yet "the baby" is still powerfully present in the family psyche — still screaming, still unable to take responsibility for itself, still thought of as a danger or as a delight. Sometimes one of the grown-up siblings — not necessarily the youngest — takes on the role of the baby, either fierce and furious or cute and cuddly, in order to satisfy some personal need or because they are obliged to take on the role by everyone else in the family. Sometimes a parent takes on the role — helpless, irritable, wounded, needing to be looked after, needing to be soothed or comforted.

In families and in organizations (which are like families), it’s as if we need someone to take on the role, as if the baby represents our collective chaos — our vulnerability, powerlessness, and need — as well as our potential to make sense of chaos. By allocating the role to someone else — “Why don’t you grow up? When are you going to stop behaving like a baby?" — we avoid having to acknowledge our own baby tendencies, because someone else is unwittingly expressing them for us. We can then sit back and enjoy the vicarious satisfactions of tending to a baby in distress or curtailing its destructive tendencies.

Babies are useful. The idea of a baby links us to the past, to a time when there really was a baby in our family or when behaving like a baby was commonplace. Members of the family may think of that time as a golden age to be re-discovered, or as a turbulent, unresolved time the wounds of which remain unhealed. The baby may serve to remind everyone of a time when the parents split up, when someone died, or when something important got stuck in the family’s relationships, with members now unconsciously revisiting the idea of the baby in an oblique attempt to move things on, to deal with old, unresolved anxieties. How can we help this re-created baby? How can we pacify its rage? Make it smile? Make it happier?

Young people have strongly ambivalent feelings about babies — protective of them in some ways and scornful of them in others. They feel so strongly because they’re so ambivalent about the baby in themselves, seeing in the family or organizational baby their own panic, vulnerability, chaos, need, frustration, and longing, as well as their potential to be good and to do good things.

In families, in organizations, and in political life, we create and maintain the idea of a baby — an idea much more powerful than any actual baby — because babies encapsulate our hopes and fears. When the prevailing political mood is gloomy, when the world seems impossible to understand, full of conflicts and confusion, stories about babies emerge in the press — a baby abandoned, a baby in need of a transplant, a baby dying of cancer, a baby lost and found. Our anxieties coalesce around the idea of a baby. Together we worry about how best to look after and love the baby, and how to fix its difficulties, hoping that our endeavors will make things better. Babies give our lives meaning, even as they disappoint and frighten us. ~

Oriana:

“Sometimes a parent takes on the role — helpless, irritable, wounded, needing to be looked after, needing to be soothed or comforted.” This is more frequent than we dare to admit. Sometimes the ravages of aging, especially brain dysfunction, are to blame.

Maturity means becoming less self-centered and more capable of satisfying one’s own needs, while respecting the needs and rights of others. It takes more “executive brain function,” such as emotional self-control rather than throwing tantrums. And it takes not being under overwhelming stress.

It takes stopping to think which course of action is best: screaming, or calmly presenting your case? Again, this requires good executive brain function, which can be sabotaged by all kinds of factors, including senility and alcoholism. We need to remember that a person doesn’t usually choose to “act like a baby” — there are reasons, many of them biological in nature — such as deterioration of brain function.

But sometimes, the person who, on the face of it, "refuses to grow up" and continues to create chaos rather than behave rationally and responsibly, acts this way because the people around him or her reinforce such behavior by rewarding it with special attention and attempts to please the chaos-maker. That's like giving candy or toys to a child who's just thrown a tantrum.

An infant is a natural little tyrant, but that's excusable as a survival mechanism. Parents will do anything to stop the screaming, and that too is understandable. Past infancy, however, there is a need for "tough love." We have to reward positive, mature behavior, and not "acting like a baby."

I love Mary's phrase, "terminal infantilism."

*

RETIREMENT CAN BE BAD FOR THE BRAIN

~ Ah, retirement. It’s the never-ending weekend, that well-deserved oasis of freedom and rest we reach after decades of hard work. As long as we have good health and sufficient savings, we’ll be OK, right?

Not quite. Some studies have linked retirement to poorer health and a decline in cognitive functioning — at times resulting in as much as double the rate of cognitive aging. This leaves people at a greater risk of developing various types of dementia, such as Alzheimer’s disease.

“Of course, this decline after retirement does not apply to everyone,” says gerontology researcher Ross Andel. “But it seems like enough people experience this decline shortly after retiring for us to be concerned,” he says.

“The decline in speed of processing — something that’s supposed to be the main indicator of the aging of the brain — was quite pronounced,” says Andel of his findings. Speed of processing refers to how quickly we can make sense of information we’re given. Why is it so important? “If people take longer to process information, they’re more likely to forget it; they’re also more likely to get confused,” explains Andel. He adds, “Speed of processing relies on a healthy brain network. If there’s any type of impairment, information needs to travel through alternative pathways. That slows down information; that leads to memory loss, and disorientation, and so on.”

Although scientists don’t know exactly why these kinds of impairments happen, Andel speculates that it could be related to the density of dendrites. Our nervous system is made up of neurons, and dendrites are the neuronal structures that typically receive electrical signals transmitted via the axon, the long, cable-like nerve fiber of the neuron. “Each neuron can have a lot of dendrites or just one; it depends on how active that neuron is,” Andel says. The more information travels through the synapse, which forms between the axon of one neuron and the dendrite of another, “the more synapses are created. They’re created by growing the number of dendrites,” he adds.

Why might retirement lead to changes in our brain circuitry? One possibility raised by Andel: If we’re not using our brains in the same way we did when we were working, “a lot of these connections become dormant … [and] those dendrites will recede.” It’s the old “use it or lose it” hypothesis.

Based on his own research and others’, Andel hypothesizes that we might be particularly susceptible to cognitive decline when “there’s lack of an activity that will replace [our] occupation.” As he notes, people who volunteer appear to experience less cognitive decline than those who don’t. “We don’t know whether it’s the intellectual stimulation from volunteering or whether it’s simply the routine. I think it’s more about routine and individual sense of purpose,” he says.

Routine may sound tiresome, but it could potentially be what we need in retirement. Andel says, “Circadian rhythm is maintained much better when we have certain tasks that we perform regularly, like getting up at a certain time, going to do something … and then going to bed at a certain time.” This isn’t always easy, especially when you don’t have the direct incentive that comes from paid employment. “It takes a lot of intrinsic motivation,” he says.

Andel’s suggestion to anyone contemplating retirement: “Find a new routine that’s meaningful.” He points to people living in the Blue Zones, regions of the world that have been identified to be home to a greater number of residents who’ve reached the age of 100 and beyond. One of the common characteristics among Blue Zone inhabitants is, says Andel, “these people all have purpose.”

As Andel puts it, purpose is about “investing yourself into something that has meaning. It might not have meaning to others but maybe it has meaning to you.”

Instead of thinking of retirement as a permanent holiday, it might be more helpful to perceive it as a time of personal renaissance. We could see our post-work life as a “wonderful opportunity to reinvest in things that truly matter to us. We can take on that hobby we always wanted to have,” Andel says. “We can re-engage with our family or friends, maybe in a brand-new or more complete way.”

Research into retirement and cognitive decline is still in its preliminary stages, cautions Andel. He says, “We don’t know exactly what it is about retirement; we just don’t understand it well enough.” Andel himself plans to keep studying the subject and understanding its effects — he has no plans yet to retire. ~

https://getpocket.com/explore/item/think-retirement-is-smooth-sailing-a-look-at-its-potential-effects-on-the-brain?utm_source=pocket-newtab

Oriana:

Unless people have developed meaningful activities outside of their job, losing that job can be devastating. I've also seen resurrection after the initial crisis that tends to follow retirement: the discovery of volunteer activities and/or creative workshops, moving to where the grandchildren are — whatever works. Meaningful leisure may be a greater challenge than work.

Creative people don't retire from their creative work, though its nature may change. They are used to working very hard, to giving their best. For them, retiring from money-making work may be the dreamed-of liberation. For many others, however, retirement is a kind of death-in-life. I have met some older people with nothing to do except watch TV and eat snacks — dementia isn’t far away. But sometimes (probably more often), it’s depression. Old people with tragic faces — it’s a devastating sight.

And then there are people who’d like to retire, but can’t afford to. That’s a separate sadness. But again, unless there are satisfying activities to take the place of the last job, or at least the will and capacity to discover and engage in such activities, retirement can quickly translate into diminished brain function and premature death.

Japan is known for having the longest life expectancy in the world. While diet may have something to do with it — seafood, seaweed, miso, natto (an exceptionally rich source of Vitamin K2, which is emerging as the most important anti-aging supplement) — the Japanese believe in the importance of what they call “ikigai.” It means "a reason for being.” Wiki: ~ The word refers to having a direction or purpose in life, that which makes one's life worthwhile, and towards which an individual takes spontaneous and willing actions giving them satisfaction and a sense of meaning to life. ~

Mary:

It happens only too often that people retire and then, with the loss of routine, the end of the work day structure, they lose all sense of purpose, their physical and psychological health declines, their mental faculties diminish, and they may even rapidly decline and die.

To avoid this it seems you must deliberately develop a way to restructure your time and energy to restore purpose and meaning to days otherwise all too empty. It doesn't much matter how you do this, but it must be more than simply filling time. It must be time filled with meaningful activity of some kind, something that sparks interest, presents a challenge, absorbs and demands attention…feels worthwhile.

Engaged and absorbed, people find not only renewed purpose, but more energy, better health, and a sense of happiness. Sometimes I think we should have classes or some sort of instruction on what to do with retirement, besides the "endless vacation," which will always lose its appeal after the novelty wears off.

Oriana:

Or endless touring and traveling — which depends on having reasonably good health, since travel is unavoidably stressful, and past a certain age, the stress may be greater than the pleasure. I don’t want to go into the health problems that are pretty much inevitable the older we get — it’s only a matter of time.

Creative workers never really retire — they may even strike out in a new direction!

We need to radically re-define retirement not as meaningless leisure but as a new kind of meaningful work. Sometimes the work is the same as before — think of the legion of university professors who choose to teach, at least part-time, even in their late seventies — or editors or scientists or therapists or even MDs who work until the end of their ability to do so. But in many cases another door opens — volunteer activities, and/or arts and crafts. Our need for meaning never ends.

*

THE 80/20 RULE IN COVID INFECTIONS

~ There are COVID-19 incidents in which a single person likely infected 80 percent or more of the people in the room in just a few hours. But, at other times, COVID-19 can be surprisingly much less contagious. Overdispersion and super-spreading of this virus are found in research across the globe.

A growing number of studies estimate that a majority of infected people may not infect a single other person. A recent paper found that in Hong Kong, which had extensive testing and contact tracing, about 19 percent of cases were responsible for 80 percent of transmission, while 69 percent of cases did not infect another person. This finding is not rare: Multiple studies from the beginning have suggested that as few as 10 to 20 percent of infected people may be responsible for as much as 80 to 90 percent of transmission, and that many people barely transmit it.

Using genomic analysis, researchers in New Zealand looked at more than half the confirmed cases in the country and found a staggering 277 separate introductions in the early months, but also that only 19 percent of introductions led to more than one additional case. A recent review shows that this may even be true in congregate living spaces, such as nursing homes, and that multiple introductions may be necessary before an outbreak takes off. Meanwhile, in Daegu, South Korea, just one woman, dubbed Patient 31, generated more than 5,000 known cases in a megachurch cluster.

Unsurprisingly, SARS-CoV, the previous incarnation of SARS-CoV-2 that caused the 2003 SARS outbreak, was also overdispersed in this way: The majority of infected people did not transmit it, but a few super-spreading events caused most of the outbreaks. MERS, another coronavirus cousin of SARS, also appears overdispersed, but luckily, it does not—yet—transmit well among humans.

This kind of behavior, alternating between being super infectious and fairly noninfectious, is exactly what k captures, and what focusing solely on R hides. Samuel Scarpino, an assistant professor of epidemiology and complex systems at Northeastern, told me that this has been a huge challenge, especially for health authorities in Western societies, where the pandemic playbook was geared toward the flu—and not without reason, because pandemic flu is a genuine threat. However, influenza does not have the same level of clustering behavior.

Nature and society are replete with such imbalanced phenomena, some of which are said to work according to the Pareto principle, named after the sociologist Vilfredo Pareto. Pareto’s insight is sometimes called the 80/20 principle—80 percent of outcomes of interest are caused by 20 percent of inputs—though the numbers don’t have to be that strict. Rather, the Pareto principle means that a small number of events or people are responsible for the majority of consequences.

This will come as no surprise to anyone who has worked in the service sector, for example, where a small group of problem customers can create almost all the extra work. In cases like those, booting just those customers from the business or giving them a hefty discount may solve the problem, but if the complaints are evenly distributed, different strategies will be necessary. Similarly, focusing on the R alone, or using a flu-pandemic playbook, won’t necessarily work well for an overdispersed pandemic.

Hitoshi Oshitani, a member of the National COVID-19 Cluster Taskforce at Japan’s Ministry of Health, Labour and Welfare and a professor at Tohoku University who told me that Japan focused on the overdispersion impact from early on, likens his country’s approach to looking at a forest and trying to find the clusters, not the trees. Meanwhile, he believes, the Western world was getting distracted by the trees, and got lost among them. To fight a super-spreading disease effectively, policy makers need to figure out why super-spreading happens, and they need to understand how it affects everything, including our contact-tracing methods and our testing regimes.

In study after study, we see that super-spreading clusters of COVID-19 almost overwhelmingly occur in poorly ventilated, indoor environments where many people congregate over time—weddings, churches, choirs, gyms, funerals, restaurants, and such—especially when there is loud talking or singing without masks. For super-spreading events to occur, multiple things have to be happening at the same time, and the risk is not equal in every setting and activity, Muge Cevik, a clinical lecturer in infectious diseases and medical virology at the University of St. Andrews and a co-author of a recent extensive review of transmission conditions for COVID-19, told me.

Cevik identifies “prolonged contact, poor ventilation, [a] highly infectious person, [and] crowding” as the key elements for a super-spreader event. Super-spreading can also occur indoors beyond the six-feet guideline, because SARS-CoV-2, the pathogen causing COVID-19, can travel through the air and accumulate, especially if ventilation is poor. Given that some people infect others before they show symptoms, or when they have very mild or even no symptoms, it’s not always possible to know if we are highly infectious ourselves.

We don’t even know if there are more factors yet to be discovered that influence super-spreading. But we don’t need to know all the sufficient factors that go into a super-spreading event to avoid what seems to be a necessary condition most of the time: many people, especially in a poorly ventilated indoor setting, and especially not wearing masks. As Natalie Dean, a biostatistician at the University of Florida, told me, given the huge numbers associated with these clusters, targeting them would be very effective in getting our transmission numbers down.

Because of overdispersion, most people will have been infected by someone who also infected other people, because only a small percentage of people infect many at a time, whereas most infect zero or maybe one person. As Adam Kucharski, an epidemiologist and the author of the book The Rules of Contagion, explained to me, if we can use retrospective contact tracing to find the person who infected our patient, and then trace the forward contacts of the infecting person, we are generally going to find a lot more cases compared with forward-tracing contacts of the infected patient, which will merely identify potential exposures, many of which will not happen anyway, because most transmission chains die out on their own.

Overdispersion makes it harder for us to absorb lessons from the world, because it interferes with how we ordinarily think about cause and effect. For example, it means that events that result in spreading and non-spreading of the virus are asymmetric in their ability to inform us. Take the highly publicized case in Springfield, Missouri, in which two infected hairstylists, both of whom wore masks, continued to work with clients while symptomatic. It turns out that no apparent infections were found among the 139 exposed clients (67 were directly tested; the rest did not report getting sick). While there is a lot of evidence that masks are crucial in dampening transmission, that event alone wouldn’t tell us if masks work. In contrast, studying transmission, the rarer event, can be quite informative. Had those two hairstylists transmitted the virus to large numbers of people despite everyone wearing masks, it would be important evidence that, perhaps, masks aren’t useful in preventing super-spreading.

Once we recognize super-spreading as a key lever, countries that look as if they were too relaxed in some aspects appear very different, and our usual polarized debates about the pandemic are scrambled, too. Take Sweden, an alleged example of the great success or the terrible failure of herd immunity without lockdowns, depending on whom you ask. In reality, although Sweden joins many other countries in failing to protect elderly populations in congregate-living facilities, its measures that target super-spreading have been stricter than many other European countries. Although it did not have a complete lockdown, as Kucharski pointed out to me, Sweden imposed a 50-person limit on indoor gatherings in March, and did not remove the cap even as many other European countries eased such restrictions after beating back the first wave. (Many are once again restricting gathering sizes after seeing a resurgence.)

Plus, the country has a small household size and fewer multigenerational households compared with most of Europe, which further limits transmission and cluster possibilities. It kept schools fully open without distancing or masks, but only for children under 16, who are unlikely to be super-spreaders of this disease. Both transmission and illness risks go up with age, and Sweden went all online for higher-risk high-school and university students—the opposite of what we did in the United States. It also encouraged social-distancing, and closed down indoor places that failed to observe the rules. From an overdispersion and super-spreading point of view, Sweden would not necessarily be classified as among the most lax countries, but nor is it the most strict. It simply doesn’t deserve this oversize place in our debates assessing different strategies.

Perhaps one of the most interesting cases has been Japan, a country with middling luck that got hit early on and followed what appeared to be an unconventional model, not deploying mass testing and never fully shutting down. By the end of March, influential economists were publishing reports with dire warnings, predicting overloads in the hospital system and huge spikes in deaths. The predicted catastrophe never came to be, however, and although the country faced some future waves, there was never a large spike in deaths despite its aging population, uninterrupted use of mass transportation, dense cities, and lack of a formal lockdown.

Oshitani told me that in Japan, they had noticed the overdispersion characteristics of COVID-19 as early as February, and thus created a strategy focusing mostly on cluster-busting, which tries to prevent one cluster from igniting another. Oshitani said he believes that “the chain of transmission cannot be sustained without a chain of clusters or a megacluster.” Japan thus carried out a cluster-busting approach, including undertaking aggressive backward tracing to uncover clusters. Japan also focused on ventilation, counseling its population to avoid places where the three C’s come together—crowds in closed spaces in close contact, especially if there’s talking or singing—bringing together the science of overdispersion with the recognition of airborne aerosol transmission, as well as presymptomatic and asymptomatic transmission.

Oshitani contrasts the Japanese strategy, nailing almost every important feature of the pandemic early on, with the Western response, trying to eliminate the disease “one by one” when that’s not necessarily the main way it spreads. Indeed, Japan got its cases down, but kept up its vigilance: When the government started noticing an uptick in community cases, it initiated a state of emergency in April and tried hard to incentivize the kinds of businesses that could lead to super-spreading events, such as theaters, music venues, and sports stadiums, to close down temporarily. Now schools are back in session in person, and even stadiums are open—but without chanting.

Countries that have ignored super-spreading have risked getting the worst of both worlds: burdensome restrictions that fail to achieve substantial mitigation. The U.K.’s recent decision to limit outdoor gatherings to six people while allowing pubs and bars to remain open is just one of many such examples. ~

https://www.theatlantic.com/health/archive/2020/09/k-overlooked-variable-driving-pandemic/616548/

Oriana:

Overdispersion and super-spreading: a small minority of people infect many others, while the majority of covid patients may infect one or none.
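
For readers who like to see the arithmetic, here is a minimal sketch of how such lopsided numbers can arise. It assumes, as epidemiologists commonly do, that the number of people each case infects follows a negative binomial distribution with mean R and dispersion k (the smaller the k, the more uneven the spread). The values R = 0.58 and k = 0.43 are illustrative assumptions chosen for this sketch, not figures given in the article, and the function names are made up for the example.

```python
import math

def neg_binomial_pmf(n, mean, k):
    """Probability that one case infects exactly n others, under a
    negative binomial model with the given mean (R) and dispersion k."""
    log_p = (
        math.lgamma(n + k) - math.lgamma(k) - math.lgamma(n + 1)
        + k * math.log(k / (k + mean))
        + n * math.log(mean / (k + mean))
    )
    return math.exp(log_p)

def share_from_top(fraction, mean, k, max_n=2000):
    """Share of all transmission caused by the most infectious
    `fraction` of cases (for example 0.20 for the top 20 percent)."""
    remaining = fraction   # portion of cases still to be counted
    infections = 0.0       # infections caused by the cases counted so far
    # Start with the cases that infect the most people and work downward.
    for n in range(max_n, 0, -1):
        p = neg_binomial_pmf(n, mean, k)
        take = min(p, remaining)
        infections += take * n
        remaining -= take
        if remaining <= 0:
            break
    return infections / mean

# Illustrative values only (assumptions, not taken from the article).
R, K = 0.58, 0.43

print(f"Cases that infect no one: {neg_binomial_pmf(0, R, K):.0%}")
print(f"Transmission caused by the top 20% of cases: {share_from_top(0.20, R, K):.0%}")
```

With these assumed values, the sketch gives roughly 69 percent of cases infecting no one, and roughly 80 percent of transmission coming from the most infectious fifth of cases. That is the same shape as the Hong Kong figures quoted above. Raising k toward large values evens out the spread and the 80/20 pattern fades.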

“Nature and society are replete with such imbalanced phenomena, some of which are said to work according to the Pareto principle, named after the sociologist Vilfredo Pareto. Pareto’s insight is sometimes called the 80/20 principle—80 percent of outcomes of interest are caused by 20 percent of inputs—though the numbers don’t have to be that strict. Rather, the Pareto principle means that a small number of events or people are responsible for the majority of consequences.”

(Go into any corporate office: you are likely to see that 20 percent of the people do 80 percent of the work.)

The author of the article is begging, almost desperately: drop the one-by-one approach, look at the clusters. Try to bust the clusters.

Simply the comparison of Northern Italy with the rest of Italy shows the very uneven distribution of covid — it clustered in the north, especially Lombardy.

“Using a flu-pandemic playbook won’t necessarily work well for an overdispersed pandemic.”

(And what about the non-catastrophe in Africa? Western scientists predicted a covid apocalypse there. It never happened. The main reason may be the young median age of the population, and also partial acquired immunity due to the population's previous exposure to less dangerous coronaviruses. As for the argument that covid in Africa doesn’t get properly diagnosed — if this happened to be the case, we would still see a sharp rise in mortality, and that didn’t occur.)

(Sadly, the number of covid deaths in the US is now approaching 220,000.)

*

THE COMPLEXITIES OF LEFT-HANDEDNESS

Steak knives, scissors, writing desks, and power tools: If you bring any of these things up around someone and they cringe, they either have a bizarre story to tell or they’re just left-handed, and, like 15 percent of the population, live in a world that’s not designed for them.

[Oriana: other sources state the prevalence of left-handedness is 10–12%]

But handedness goes deeper than how you like to hold a pen. A dive into the genetics of handedness reveals that lefties have quite a few other things in common, as well. But before that, it’s important to understand where handedness comes from.

Where Does Handedness Come From?

It used to be thought that lefties came to be as a result of a mother being stressed during pregnancy, but a more scientifically valid explanation has traced handedness to specific genes. Geneticists debated for years over whether the genes responsible for handedness were spread throughout our DNA or whether it was just one gene; there seemed to be no clear pattern.

Now, it seems to be accepted that one gene does most of the work.

But even so, it’s still difficult to predict whether a child will be right or left-handed. Because we receive genetic information from both parents, there’s a chance it could go either way, even if mom and pop are both lefties themselves.

People who have one variant of the gene in question will always be born right-handed. The other, less common variant is left up to chance, so babies with the “random” gene will still have a 50-50 chance of being a righty anyway. This is why most people end up right-handed and some sets of identical twins have opposite dominant hands.

It seems like that handedness gene, or at least others that are closely associated with it, are responsible for quite a lot. For example, lefties have been shown to have higher rates of diseases, like inflammatory bowel disease or Crohn’s disease.

Most studies on handedness and potential health risks only show that there’s a link between the two, not any direct cause-and-effect relationship. But even if the exact cause of these diseases remains unknown, you definitely can’t blame it on the struggle with right-handed silverware.

What Does a Lefty’s Brain Look Like?

Many of the notable differences between the brains of righties and lefties remain undiscovered, as lefties are often left out of neuroscientific research, because those exact differences can come across as noise in a given study’s data. Despite a 2014 plea to start studying lefties more extensively, little progress has been made. Meanwhile, we know all about how to create mutant left-handed plants. How’s that for scientific priorities?

But we do know some things. Notably, the brains of lefties tend to use broader swaths of the cortex for different tasks. While righties use just one of their brain’s hemispheres to remember specific events or process language, these brain functions are spread out across both hemispheres in lefties.

This may make left-handed people more resilient to strokes or other conditions that damage specific brain regions. If a righty has the small part of their brain responsible for language taken out by a stroke, then that’s that. But because lefties use more of their brain for these tasks, it’s like they have a built-in backup. On the other hand, this widespread brain activation may make lefties more susceptible to conditions like ADHD and schizophrenia, in which unregulated, heightened activity across multiple regions of the brain causes problems.

That’s still a big improvement over previous theories that left-handed people had shorter lifespans linked to increased emotional problems during childhood. Those theories never found a foothold and have been debunked time and again.

It’s Not Always About Biology

Some of the differences between righties and lefties are more sociological than biological. For example, lefties are commonly cited as better fighters. Scientific attempts to correlate left-handedness with a violent predisposition have always fallen short, and it seems like the advantage comes from an element of surprise since lefties are a perpetual minority. This is why lefties are commonly seen as advantaged in head-to-head or combat sports like boxing, tennis, and fencing.

While the science trickles in, it’s important to remember that many of these neurological differences between righties and lefties are minimal, and for the most part you won’t see too much of a difference between people, unless you count some awkwardness when you go for a handshake, or maybe a bumped elbow or two at the dinner table.

https://getpocket.com/explore/item/the-unique-science-of-left-handedness?utm_source=pocket-newtab

Charles:

Left-handed baseball players are also some of the best, and it definitely pays to be a left-handed baseball player. Sandy Koufax, arguably the greatest pitcher (who happened to be Jewish), and Babe Ruth, arguably the greatest home run hitter of all time, were both southpaws.

from another source:

Despite popular notions to the contrary, left-handed people do not think in the right hemisphere of the brain, nor do right-handers think in the left hemisphere. The motor cortex, that part of each hemisphere cross-wired to control the other side of the body, is only one relatively minor aspect of this dizzyingly complex organ, and it says nothing or nearly nothing about a person’s thoughts or personality.

Nearly 99 percent of right-handers have language located in the left hemisphere, and about 70 percent of lefties do. A different proportion, yes, but hardly the opposite; most lefty brains are like righty brains, at least as far as speech function is concerned. The rest either have language in the right hemisphere, or have it distributed more evenly between the two sides of the brain.

Though Broca himself had gently dismissed the link between handedness and right-hemispheric speech dominance, he hadn’t gone out of his way to assert the dissociation, so the myth that lefties had their speech located in the right hemisphere persisted for nearly half a century. It wasn’t until WWI, when physicians began to notice that injured veterans who were lefties didn’t necessarily have right-hemispheric language localization, that the myth began to erode and the quest for new theories gained momentum.

*

In 1972, Marian Annett published a paper titled “The Distribution of Manual Asymmetry,” which, although it drew little notice at the time, would later serve as the foundation for one of the most widely accepted explanations of human handedness. She called it the Right Shift Theory, and she later expanded it in a 1985 volume of the same name. Annett argues that whereas human handedness is comparable to the left- or right-side preferences exhibited by other creatures with hands, paws, feet, or what have you, the approximate 90 percent predominance of right-handedness in the human population sets us apart. All other animals have a 50-50 split between righties and lefties. According to Annett’s model, handedness in nature rests on a continuum, ranging from strong left, through mixed, and then to strong right-handedness. But for humanity the distribution of preference and performance is dramatically shifted to the right. Human bias to the right, Annett explains, was triggered by a shift to the left hemisphere of the brain for certain cognitive functions, most likely speech. . . . That momentous shift was caused by a gene.

Of course, that question had perplexed generations of scientists since Darwin — who, by the way, was a victim of the confounding heredity of handedness: his wife and father-in-law were lefties, but only two of Darwin and Emma’s ten children were. But Annett’s Right Shift Theory was the first systematic explanation for the genetics of handedness.

Wolman points to Kim Peek, the autistic “megasavant” on whom the film Rain Man is based, and perhaps most notably Albert Einstein, celebrated as “the quintessential modern genius”:

Examination of his brain after death showed unusual anatomical symmetry that … can mean above-normal interhemispheric connections. Then there’s the fact that Einstein’s genius is often linked with an imagination supercharged with imagery, a highly right hemisphere-dependent function. It was that kind of imagination that ignited questions leading eventually to the Theory of Relativity: what does a person on a moving train see compared with what a person standing still sees, and how would the body age if traveling near the speed of light in a spaceship compared to the aging process observed on Earth? Is it such a stretch to speculate that Einstein landed on the fortunate end of the same brain organization spectrum upon which other, less lucky individuals land in the mental illness category? And what if handedness too is influenced by this organizational crapshoot?

Einstein’s handedness is something of a matter of debate. While he is often cited among history’s famous lefties, laterality scholars have surmised that he was mixed-handed, which is not to be confused with ambidextrous: mixed-handed people use the right hand for some things and the left for others, whereas the ambidextrous can use both hands equally well for most tasks.

https://getpocket.com/explore/item/the-evolutionary-mystery-of-left-handedness?utm_source=pocket-newtab

*

ending on beauty:

And God said to me: Write.

Leave the cruelty to kings.

Without that angel barring the way to love,

there would be no bridge for me

into time.

~ Rainer Maria Rilke

Creation and Expulsion from Paradise; Giovanni di Paolo, 1445
