*

AGAMEMNON’S MASK

Winter nights fluoresced,

car windows fogging up

until we were closed off

in a white-gray shell,

our faces pale, our hungry

hands and mouths; the city

far-off, jeweled, Babylonian.

Your projected suicide note:

Because.

The myth of your virginity

ticked off one by one

on your marble fingers.

Your wish to have been

a Royal Air Force pilot

killed in World War II.

And yet — that lovely, awkward

moment when you first

took off your glasses.

The time we inexplicably started

dancing together without music,

a slow small-stepped tango.

*

The last time I saw you, you slept

when I left. I stroked your hair.

You dreamed it would fall around

the gold on a dead hero’s face,

like Agamemnon’s mask.

Everyone thinks he is different.

I stepped out into the common dark.

~ Oriana

One of the things I learned many years ago, when I was grappling with many more problems than now (youth is so overrated), is that a lot of problems stem from thinking, “I am separate, different, and superior.” In fact, we share a lot more with others than we think, including the types of psychological messes we get into.

*

JOHN LE CARRÉ’S WORK REMAINS ESSENTIAL

The Spy Who Came in from the Cold, Le Carré’s third novel, is a short book composed of short chapters. Its action begins and ends in frozen darkness at the Berlin Wall. The barrier not only separates two empires and ideologies, but cleaves the psyches of the characters themselves. They pass through checkpoints on forged papers, while guards watch from the towers with guns drawn. Anyone who doesn’t wear a uniform speaks in a whisper. The dialogue is terse, the pace relentless. Double-crossers betray double-crossers, conspiracies ripen until vaster conspiracies subsume them, and the bonds of love and friendship drag the central and most admirable characters into disaster.

Le Carré evokes the curdled atmosphere of 1960s Berlin and London so convincingly—justified paranoia, tawdry nightlife, terrible weather—that you emerge from his pages longing for clear skies and a glass of freshly squeezed orange juice. The book is a spy novel in the same way that 2666 is a noir or Pride and Prejudice a romance. It demonstrates once again that there is no enduring divide between genre and literary fiction, or higher and lower literary forms. There are only good novels and weaker ones, in any tradition. And when you encounter a great book, you hold it close.

Le Carré’s most enduring character, the owlish and unfailingly polite intelligence officer George Smiley, plays only a minor role here. He comes to the fore in the other Cold War novels. His appearance and mannerisms remain constant over the decades, while historical events—the Prague Spring, the Vietnam War—sweep on. He hardly ages, wears expensive but ill-fitting clothes, wipes his glasses with his tie, and, during his periodic retirements from government service, studies seventeenth-century German poets. His wife, who occupies a higher social station, conducts notorious affairs, but Smiley’s affection for her persists.

In le Carré’s second novel, A Murder of Quality, Smiley plays the gentleman detective, investigating a boarding-school murder with the courage and tact of a latter-day Father Brown. He seems immune to the class prejudice that infects the teachers and administrators who run the place, acts decisively, and emerges from the pages unstained by the sordid events he uncovers. But his character takes on a more troubled cast in the espionage novels proper. You begin to wonder whether his personal restraint conceals a ruthless streak.

In The Looking Glass War, the author’s fourth book, the Circus (le Carré’s version of MI6, Britain’s foreign intelligence agency) dispatches Smiley to West Germany to clean up another outfit’s botched operation. A military intelligence unit has lost an operative over the border. Extricating him is impossible, or would at least be embarrassing to the British government. Smiley orders the staffers to pack up the equipment, leave the farmhouse they’ve commandeered, and abandon their man to the East German police. The agent will face interrogation, trial, and a lonely execution in a foreign land, but at least, on Smiley’s side, men of rank have sidestepped a public scandal.

The pattern recurs. Agents die in the field; bureaucrats toast their memory over glasses of port. You’re always happy when Smiley shows up in the books—you love the contrast between his shambling appearance and the precision of his intellect, and appreciate his mastery of the tools of his trade (dead drops, coded messages, considered flattery)—but you can’t ignore that he’s up to his neck in a dirty business. After all, every spy traffics in manipulation and deceit. Smiley never acts for personal gain (though his skills do earn him the directorship of the Circus, by the time of 1977’s The Honourable Schoolboy). He is concerned only with the interests of the nation and bureaucracy to which he belongs. Still, what are those interests? Who, ultimately, does Smiley serve?

Rarely does the reader sense that the Circus’s operations will tip the balance of power: the agency is an enfeebled auxiliary to the CIA; Britain, a client (at best) of the United States. Le Carré is not writing of ticking time bombs or plots to overthrow democracy, but of minor skirmishes behind the lines. Smiley turns a foreign agent, unmasks a mole, bolsters the Circus in the eyes of government ministers. He wins his battles; the Cold War grinds on.

Read in order, the novels recount an unrelenting sequence of moral compromise, betrayal, and murder. Smiley increasingly seems weighed down by sadness—the sadness, perhaps, of a man who cannot shake his own doubt. Even when he triumphs over Karla, his brilliant Soviet adversary, in the 1979 novel Smiley’s People, he exits on an ambivalent, rather than triumphal, note. So are the novels, in the end, tragedies—narratives of the private toll of service in a noble struggle? Or do they record an angrier and less exalted vision of suffering? What, in short, were all those deaths for?

Alec Guinness as George Smiley

Reviewers in the first half of le Carré’s career identified him as a conservative of a now-vanished kind: a man suspicious of wealth and bemused by ideology, whose writing is suffused with nostalgia for forms of British life that were already waning before Thatcher, privatization, and the bankers finished them off. More broadly, critics have described spy fiction as an inherently conservative genre, for the spy’s role is to guard the existing order against those who would subvert it.

But such readings can hardly account for the urgency, and occasional stridency, of le Carré’s post-Cold War novels. Whereas Smiley is uneasy about the intentions of the Circus’s CIA cousins, le Carré’s later protagonists express their fury at the injustice of an American-dominated global order. As Smiley himself admits in The Secret Pilgrim (1990), “the right side lost, but the wrong side won.”

Often, American intelligence officers serve as the literal villains of the post-Cold War books. In Absolute Friends (2003), a CIA officer turned private contractor invents a terrorist plot, and frames and murders a retired British agent, in a scheme to buttress German government support for George W. Bush’s “Global War on Terror.”

In 2008’s A Most Wanted Man, the CIA snatches an innocent Chechen refugee from the streets of Hamburg to interrogate (and presumably torture) him in a secret prison. Le Carré takes on gun-runners (The Night Manager, 1993), money launderers (Single & Single, 1999), pharmaceutical behemoths (The Constant Gardener, 2001), and Russian oligarchs (Agent Running in the Field, 2019). He writes against state violence and private greed. His protagonists assert a lonely humanism, before power sweeps them away.

Critics haven’t been as kind to these later novels. They are longer, less disciplined, and more polemical than the Cold War classics, the argument goes. Take Michiko Kakutani’s New York Times review of Absolute Friends. The novel is “ham-handed and didactic,” she writes, becoming, by its concluding sections, “a clumsy, hectoring, conspiracy-minded message-novel meant to drive home the argument that American imperialism poses a grave danger to the new world order.”

But is the critique fair? Le Carré’s description of CIA-style abductions, where massed operatives overwhelm their target, bind his hands, and dress him in black goggles and headphones engineered to block all sight and sound of the outside world, derives from contemporary journalistic accounts.

We know, from later reporting, that FBI informants baited vulnerable Americans into participating in concocted terrorist plots in the years after the September 11, 2001 attacks, that the NSA illegally sought to sweep up all domestic and foreign communications through dragnet surveillance, and that the CIA tortured and murdered suspected terrorists, including innocent men, at its black sites. Meanwhile the toll of civilian dead, from American or American-funded wars in Iraq and across the greater Middle East, now stretches into the many hundreds of thousands.

Under the present administration, the American government no longer even invokes the language of universal human rights. Our president threatens to take what he wants, including foreign territory and treasure, with brute force. It’s difficult to charge a writer with clumsiness or conspiratorial thinking when reality has since demonstrated that there is no lower limit to our degradation.

*

I’ve lived more than half my life in the United States, and English is my native language, but I’ve sometimes had the sense—while reading the news, or discussing politics or foreign affairs with friends—that others are speaking in tongues. Official propaganda, repeated often enough, infects both journalism and private speech. For years at a stretch, certain words and phrases disappear from polite discourse: “kidnapping,” “torture,” “murder,” “war crime,” “genocide.” New locutions—“extraordinary rendition,” “enhanced interrogation,” “targeting,” “collateral damage,” “precision strikes”—abruptly replace them. There are writers who participate in that public erasure, and others who stand against it. Le Carré belongs to the second group.

John le Carré receives his honorary doctorate from Oxford

Read him from the beginning and you discover a novelist who wrote himself toward ever more terrifying truths. His novels explore the moral squalor of all wars, justified or not. His most admirable characters rebel against the bureaucratic and corporate systems that wield human beings as tools. He is not an idealist—he knows these men and women are doomed—but an existentialist who dramatizes the necessity of individual struggle. He is a spy novelist for whom espionage itself becomes a social poison. Although he writes novels of the exterior, of historical event rather than human memory and desire, his plots reveal much about the state of our souls. His body of work, sixty years in the making, shows the world as it is.

https://lithub.com/a-writer-for-our-time-why-john-le-carres-work-remains-more-essential-than-ever/?utm_source=firefox-newtab-en-us

*

“Virgin female prisoners should be raped before execution so that they do not go to heaven.” ~ Ayatollah Khomeini (founding leader of the Islamic regime in Iran)

*

QUARTET FOR THE END OF TIME

On January 15, 1941, during the freezing winter at the Görlitz prisoner-of-war camp, something very unusual happened: a group of Nazi officers quietly sat and listened to a concert performed by prisoners.

It all started in 1940, when Germany invaded France. Near the town of Nancy, German forces captured a medical worker and composer named Olivier Messiaen, along with two other soldier-musicians.

The three were sent to a camp in Germany—Görlitz—where a guard named Karl-Albert Brüll gave Messiaen an empty barrack where he could peacefully write music. There, he composed a unique piece inspired by the rhythm of war and time. It became known as The Quartet for the End of Time.

Later, Brüll even helped by faking documents so the musicians could escape. ~ Jackie Phipps, Quora

*

TRUMP’S CULTURE OF CORRUPTION

At the gala dinner President Trump held last month for those who bought the most Trump cryptocurrency, the champion spender was the entrepreneur Justin Sun, who had put down more than $40 million on $Trump coins. Mr. Sun had a good reason to hope that this investment would pay off. He previously invested $75 million in a different Trump crypto venture — and shortly after the Trump administration took office in January, the Securities and Exchange Commission paused its lawsuit against him on charges of cryptocurrency fraud.

The message seemed obvious enough: People who make Mr. Trump richer regularly receive favorable treatment from the government he runs.

The cryptocurrency industry is perhaps the starkest example of the culture of corruption in his second term. He and his relatives directly benefit from the sale of their cryptocurrency by receiving a cut of the investment. Even if the price of the coins later falls and investors lose money, the Trumps can continue to benefit by receiving a commission on future sales. Forbes magazine estimates that he made about $1 billion in cryptocurrency in the past nine months, about one-sixth of his net worth.

Only a few years ago, Mr. Trump was deeply skeptical of cryptocurrency, calling it “potentially a disaster waiting to happen” and comparing it to the “drug trade and other illegal activity.” Since he and his family have become major players in the market, however, his concerns have evidently disappeared. He shut down a Justice Department team that investigated illegal uses of cryptocurrency. He pardoned crypto executives who pleaded guilty to crimes, and his administration dropped federal investigations of crypto companies. He nullified an Internal Revenue Service rule that went after crypto users who didn’t pay their taxes.

The self-enrichment of the second Trump administration is different from old-fashioned corruption. There is no evidence that Mr. Trump has received direct bribes, nor is it clear that he has agreed to specific policy changes in exchange for cash. Nonetheless, he is presiding over a culture of corruption. He and his family have created several ways for people to enrich them — and government policy then changes in ways that benefit those who have helped the Trumps profit. Often Mr. Trump does not even try to hide the situation. As the historian Matthew Dallek recently put it, “Trump is the most brazenly corrupt national politician in modern times, and his openness about it is sui generis.” He is proud of his avarice, wearing it as a sign of success and savvy.

This culture is part of Mr. Trump’s larger efforts to weaken American democracy and turn the federal government into an extension of himself. He has pushed the interests of the American people to the side, in favor of his personal interests. His actions reduce an already shaky public faith in government. By using the power of the people for personal gain, he degrades that power for any other purpose. He stains the reputation of the United States, which has long stood out as a place where confidence in the rule of law fosters confidence in the economy and financial markets. This country was not previously known as an executive kleptocracy.

During Mr. Trump’s first term, he frequently used his powers to reward himself. He held government events at his hotels, and his family business continued to make deals involving foreign governments, in an apparent violation of the constitutional prohibition of enrichment from foreign leaders. But those erasures of ethical norms now look like a dress rehearsal for the ultimate production of the second term. Given the stakes, we believe that it is important to step back and document the range of self-dealing since he took office four months ago:

While administration officials engage in complex negotiations in the Middle East, Mr. Trump and his family are making billions of dollars’ worth of deals with players in the region. The Trump Organization has six real estate projects planned in Qatar, Saudi Arabia, Oman and the United Arab Emirates. The company struck a deal with the Qatari government to build a golf club and beachside villas that will bring in millions of dollars in fees. Mr. Trump announced recently that Qatar was donating a Boeing 747-8 worth about $200 million to serve as a more luxurious Air Force One, which he has said could go to his presidential library after he leaves office. For all this, he has made clear that tiny Qatar can expect a cozy relationship. “We are going to protect this country,” Mr. Trump said in Doha. “It’s a very special place, with a special royal family.”

During his first term, those currying favor with Mr. Trump bought drinks and dinner or spent the night at his Washington hotel. Now they can spend half a million dollars to join the private club Donald Trump Jr. is opening in Georgetown. It is called Executive Branch. The club’s founding members include Cameron and Tyler Winklevoss, twin brothers whose cryptocurrency company was being sued by the S.E.C. — until Mr. Trump’s administration put a hold on the lawsuit.

As his administration is negotiating with Vietnam to reduce the tariffs he imposed on the country’s goods, the government there is making way for a $1.5 billion golf complex outside Hanoi, as well as a Trump skyscraper in Ho Chi Minh City. Vietnamese officials said in a letter that the real estate project needed to be fast-tracked because it was “receiving special attention from the Trump administration and President Donald Trump personally.”

Serbian officials cleared the way for a Trump International Hotel in Belgrade by using a forged document to permit the demolition of a cultural site at the location. Serbian opposition leaders say the forgery demonstrates how eager the country’s government has been to do a deal benefiting Mr. Trump.

Mr. Trump has held meetings, including one in the Oval Office, to force a merger between the PGA Tour and the Saudi-backed LIV golf circuit, which frequently holds tournaments on Trump courses. In April, LIV Golf paid the Trump family to host a tournament at the Trump National Doral in Florida. A merger could lead to more such events.

The right-wing activist Elizabeth Fago attended a $1 million per person fund-raising dinner for MAGA Inc., Mr. Trump’s super PAC, in April. Less than three weeks later, The Times reported, he granted a full pardon to Ms. Fago’s son Paul Walczak, who pleaded guilty to tax crimes in 2024. The pardon is one of many issued by Mr. Trump to people who provided him with political or financial support or were associated with others who did.

After personally suing media companies that the government regulates, including CBS/Paramount and ABC/Disney, Mr. Trump has won millions of dollars in settlements. Paramount has reportedly offered $15 million to settle a baseless lawsuit Mr. Trump filed against CBS, fearing that the Trump administration would otherwise block its planned merger with Skydance Media. Mr. Trump is demanding $25 million.

Amazon has agreed to pay $40 million for the rights to a documentary about Melania Trump. That’s tens of millions more than such projects usually cost, Hollywood executives have said. Mrs. Trump’s cut is more than 70 percent. Defense contracts for web services would be reason enough for Amazon to curry favor with Mr. Trump.

Mr. Trump’s inaugural committee raised $239 million, mostly from large corporations and business leaders. The committee spent far less than that on the inauguration and faces few legal restrictions on what to do with the rest of the money. It is typical for presidents not to spend all of their inaugural funds, but previous presidents raised far less than Mr. Trump. The largest donor, a chicken processor called Pilgrim’s Pride, already seems to be benefiting from favorable government policies, The Wall Street Journal reported.

The motivation for people to give so much money to the president of the United States is plain. Some want him to bestow favors on them; others are trying to avoid being punished by his administration’s vindictive approach to governance. All of them want to shift government policy to benefit them, often at the expense of the American people.

Foreign benefactors cannot use campaign contributions to do so, because federal law prohibits foreigners from donating to election campaigns. There are no such restrictions, though, on Georgetown club memberships or crypto funds. The investors in the Trumps’ crypto company include the United Arab Emirates, which is investing $2 billion, and a small technology company with ties to China that is buying as much as $300 million worth of Mr. Trump’s memecoins.

What can be done about all this? Congressional Republicans probably have the greatest ability to influence his behavior, and they have been largely quiescent. It is quite a contrast with the past, when Republicans appropriately raised concerns about dubious Democratic behavior, such as Hunter Biden’s attempts to cash in on his family name and Bill Clinton’s efforts to reward top Democratic donors with nights in the Lincoln Bedroom or even a pardon. The Trumps’ behavior is far more egregious and voluminous.

Legal remedies also appear limited. Mr. Trump’s Justice Department and other agencies have made clear that they will not investigate his allies, let alone him and his family. Even long-term efforts to investigate corruption will be hobbled. The Supreme Court last year made it virtually impossible to hold a president criminally liable for actions even distantly related to his official duties, which may well include Mr. Trump’s self-enrichment. As for the people offering the tributes to Mr. Trump, he has made clear that he is willing to use his pardon power to protect his allies, regardless of the criminality of their behavior.

Sadly, that leaves voters as the remaining remedy. The best hope of holding Mr. Trump accountable for his culture of corruption involves calling attention to it and making his allies pay a political price for enabling it. Congressional Republicans clearly feel sheepish about aspects of it. A few of them, including Senator John Thune of South Dakota, the majority leader, and Senator Ted Cruz of Texas, expressed concerns about the Qatari airplane. So did Ben Shapiro, the conservative podcaster. They all recognize that corruption is a political vulnerability.

Some congressional Democrats have tried to focus attention on the situation. “Donald Trump wants to numb this country into believing that this is just how government works,” Senator Chris Murphy, Democrat of Connecticut, said in a recent floor speech. “That he’s owed this. That every president is owed this. That government has always been corrupt, and he’s just doing it out in the open.” But Democrats should do even more to highlight the ways that Mr. Trump is using powers that rightfully belong to the American people — the powers of the federal government — to benefit himself.

If Americans shrug this off as just “Trump being Trump,” his self-dealing will become accepted behavior. It will encourage other politicians to sell their offices. The damage will undermine our government, our society and even our economy. Historically, when corruption becomes the norm in a country, economic growth suffers, and living standards stagnate. Under Mr. Trump, the United States is sliding down that slope.

https://www.nytimes.com/2025/06/07/opinion/trump-corruption.html?unlocked_article_code=1.NE8.NOyL.7OYCRi8mnW9G&smid=fb-share

mag:

As noted by other readers, the Trump administration has taken grift and corruption to an unprecedented degree. He is a corrupt scammer, totally uninterested in governance in any form. Rather, he is focused on enriching himself and his family, aided and abetted by the GOP Congress and members of the Supreme Court. Thanks to Trump and his enablers/sycophants, we are now a third-rate country. What the country needs — and is crying out for — are solutions. Anyone can criticize. It takes much more to lead.

Waifdog:

One big problem is cynicism. I just had breakfast with two good friends who dismissed what I had to say by saying everybody does it. They expect politicians to be corrupt and cannot distinguish matters of degree. They also say they don’t believe anything in the news because the media all lie. A convenient way to stay ignorant. These are smart people, one a lawyer and retired professor, the other a CPA. And both extremely religious. I am so disappointed.

Bob Guthrie:

Cryptocurrency is the ultimate Ponzi scheme. It produces nothing. Common sense dictates we don't get things for nothing in life. When the pyramid scam falls, let's hope it doesn't cause something like a great depression. It neatly captures the something-for-nothing Trump mentality. It's not even at the level of quid pro quo... it's pianos for free.

Jason 98144:

The thing here is that Trump is a perfect marriage of corruption and incompetence. Look at his inability to manage the relationship with his primary funder, which in turn will undoubtedly turn off Silicon Valley wealth. If you can’t figure out how to take a bribe from Elon Musk, that doesn’t say a lot about your competence in general. His government’s predictable incompetence in the upcoming hurricane season and in the face of any other disaster will bring this to a complete end. You can get away with corruption for a long time if you’re competent. It gets a lot harder to pull it off if you’re a fool.

Russ:

Having visited and lived in many countries across the world, I have come to the conclusion that the key quality separating poor countries from countries with widely distributed wealth is the degree of corruption of the government. A corrupt government siphons off the country's wealth without providing the public education, health, stable finances, and infrastructure that are the foundations of a successful society. In electing Trump, American voters knowingly chose corruption and a dismantling of all of the elements that have made the US the world's most successful country for the past 80 years.

I suspect that the voters are unaware of the reasons their grandparents were so determined to avoid the perils of poverty. Voters were drawn instead to recreate the idleness they enjoyed during the pandemic, an idleness that will surely return as our country heads into a long period of decline and becomes a former superpower. Having never experienced poverty, most voters will be in for a rude shock to learn that extreme poverty is anything but idle. The Roman Empire ruled the world until the Romans gave in to sloth and overwhelming corruption. We still have a small chance of rescuing our country, but our chances decline each day we allow Trump's combination of corruption and dismantling of civil society to continue.

*

ARE ONLY CHILDREN REALLY MORE SELFISH?

Only children often face the stereotype of being more selfish than children who grow up with siblings, supposedly because they never have to compete for their parents' attention. Recent studies, however, have shown that this is not the case, and that growing up without siblings does not lead to increased selfishness or narcissism. Other research suggests that the differences in social behavior between only children and children with siblings are not large or pervasive, and "may grow smaller with age."

Birth-order research has typically not included only children, on the grounds that they cannot be fairly compared to children who have grown up with siblings. However, it is possible to compare the personality traits of siblings and only children, according to a 2025 paper by Michael Ashton, a professor of psychology at Brock University, Canada, and Kibeom Lee, a professor of psychology at the University of Calgary, Canada.

Their study presented some new and fascinating results. It examined the association between personality, birth order, and number of siblings in one online sample of 700,000 adults and a separate sample of more than 70,000. Middle-born and last-born siblings averaged higher on the "Honesty-Humility" and "Agreeableness" scales than first-born siblings.

"Honesty-Humility" measures how honest and humble a person is: a high-scoring person is unlikely to manipulate others, break rules, or feel entitled.

A low-scoring person may be more inclined to break rules and may feel a strong sense of self-importance. On the agreeableness scale, a high-scoring person tends to be forgiving, lenient in judging others, even-tempered and willing to compromise, while a low-scoring person may hold grudges, be stubborn, be quick to feel anger, and be critical of others.

"These differences were quite small in size, particularly when the comparisons involve people from families having the same number of children," Ashton and Lee say in an email. "In contrast, the differences in these dimensions between persons from a one-child family (i.e., only children) and persons from a six-or-more-child family were considerably larger, somewhere between the sizes that social scientists would call 'small' and 'medium'."

So, I ask, is the influence of birth order just a zombie theory – a concept that is wrong but which refuses to die? Rohrer disagrees. "I'm not sure whether I would call it a zombie theory," she says. "From the scientific perspective, I think the literature is progressing quite productively."

So we may, one day, have a clearer answer as to what it means to be an eldest daughter. Until then, I'll keep letting my younger sister believe I'm inherently smarter than her.

https://www.bbc.com/future/article/20250619-does-birth-order-shape-your-personality?at_objective=awareness&at_ptr_type=email&at_email_send_date=20250625&at_send_id=4393374&at_link_title=https%3a%2f%2fwww.bbc.com%2ffuture%2farticle%2f20250619-does-birth-order-shape-your-personality&at_bbc_team=crm

*

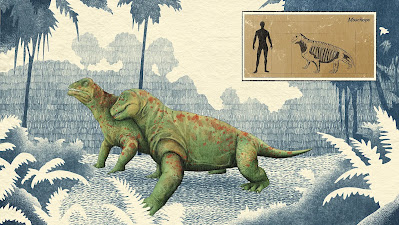

TERRIFYING PERMIAN MEGAFAUNA

Long before T. rex, the Earth was dominated by super-carnivores stranger and more terrifying than anything dreamed up by Hollywood.

The two animals circled each other, both assessing their rival's robust, hairless body. With sabre-teeth like steak knives, piercing claws and skin as thick as a rhino's, they snapped their jaws open nearly 90 degrees – and launched into battle. From the right-hand side of one animal, the other's teeth crunched down from above. In a split second, it was over. Sinking its five-inch (12.7cm) canines into its opponent's boxy snout, like hot needles through wax, the attacker claimed victory. This actually happened – or something like it.

Around a quarter of a billion years later, on a sunny day in March 2021, Julien Benoit was handed a rather unpromising container and invited to take a look. He was working in a pleasantly cool office at the Iziko Museum of Natural History in Cape Town, South Africa, where he had come to study the museum's fossil collections. The vessel was a very old, simple cardboard box.

"It hadn't been opened for at least 30 years," says Benoit, an associate professor of evolutionary studies at the University of the Witwatersrand, Johannesburg. Inside was a jumble of bones, including countless skulls, many of which had been mislabeled. As he was sorting through and re-classifying them – assigning them to long-extinct species – he noticed a small, shiny surface.

"It was an exciting moment. I immediately knew what I was looking at," says Benoit. With a wide smile, he went to visit his colleague and asked to borrow her microscope to take a closer look. The shiny surface belonged to a tooth. This one was pointy and rounded, and it was embedded in the skull of another animal, probably a member of the same species. Benoit believes that two wolf-sized individuals had been fighting for dominance before one of their smaller teeth snapped off.

But this wasn't a tooth from any dinosaur. It was an artefact of a long-forgotten world – one immortalized in stone long before T. rex, Spinosaurus or Velociraptor made their debut. The skull belonged to an unidentified species of gorgonopsian – a group of slick apex predators which stalked the Earth around 250 to 260 million years ago, chasing down large prey and ripping off chunks of their flesh to swallow whole.

This was the Permian, an obscure period of geological history when the planet was ruled by giant, bone-chilling beasts that ran with a characteristic waddle and sometimes snacked on sharks. During this living nightmare, there were occasionally more carnivores on land than there was prey for them to eat.

A strange world

The Permian lasted from some 299 to 251 million years ago, a time when all the land on Earth had coalesced into a single, rabbit-shaped lump – the supercontinent Pangaea – surrounded by a vast, global ocean called Panthalassa.

This was an era of extremes. It opened with an ice age that turned the southern half of the continent into a continuous block of ice and locked up so much water that global sea levels dropped by up to 120m (394ft). Once this was over, the supercontinent gradually warmed up and dried out. With such an expanse of continuous land, the interior did not benefit from the cooling or moistening effects of the ocean, creating swathes of wasteland. By the middle Permian, central Pangaea was mostly desert scattered with conifers, punctuated by the occasional flood. Parts were nearly uninhabitable, sometimes experiencing air temperatures of up to 73C (163F) – hot enough to slow-roast a turkey.

"So quite a lot of aridity, but nonetheless wetter down the edges, and certainly in the northern and southern hemispheres, there was plenty of vegetation," says Paul Wignall, professor of paleoenvironments at the University of Leeds in the UK.

Then towards the end of the Permian, the entire planet abruptly heated up by about 10C (18F) – roughly double the worst case scenario today if greenhouse gas emissions continue to rise unchecked. This set the scene for the largest mass extinction in Earth's history, and the conditions in which the dinosaurs would come to thrive.

But during this era, the evolution of T. rex was still some way away. In fact, most of the iconic dinosaurs we're familiar with today were about as close to existing in the Permian as we are to their time now. Instead, the largest land animals were the synapsids – a peculiar group with a kaleidoscopic array of body shapes and features, from the newt-like Cotylorhynchus, with an oddly tiny head and the mass of a small moose, to the goofy Estemmenosuchus, reminiscent of a hippo wearing a lumpy papier-mâché party hat.

The synapsids shared their world with a variety of other eccentric wildlife. The skies were ruled by duck-sized, dragonfly-like insects such as Meganeuropsis.

The largest insect that ever lived was Meganeuropsis permiana, a giant predatory relative of modern dragonflies. It had a wingspan of up to 75 cm (2.5 feet) and a body length reaching 47 cm (18.5 inches). Meganeuropsis permiana lived during the early Permian; the Permian as a whole spanned roughly 299 to 252 million years ago. Fossils have been found in North America, particularly in Kansas. This "griffinfly" was a fearsome predator, thought to have been capable of taking down small vertebrates such as amphibians. The decline of giant insects has been linked to falling atmospheric oxygen levels, which limited insect respiratory efficiency, and to climate changes that affected the ecosystems where these insects thrived.

In fresh water, there were 33ft (10m)-long carnivorous amphibians to contend with – their long, snapping snouts resembling those of crocodiles. Meanwhile, the oceans were patrolled by Helicoprion, mysterious shark-like fish with serrated circular "saws" attached to their mouths. It's thought that Helicoprion used this brutal apparatus to slice open the shells of ammonites and cut through the bodies of large, fast-moving prey.

"I mean, there were so many weird and wacky creatures… I think it just highlights what a vibrant time this was," says Suresh Singh, a visiting research fellow in the School of Earth Sciences at the University of Bristol in the UK. Indeed, this was the first time that four-legged animals had mastered living entirely on land. Before the Permian came the Age of Amphibians, when most species were still tied to water for at least part of their lives, Singh explains.

But synapsids had a major advantage over amphibians – they could incubate their young within their own bodies, or lay large eggs that retained their own moisture. They essentially had their own portable "private pond," so they no longer needed lakes or rivers to reproduce.

The group also developed waterproofing on their bodies, so they could live in a wide variety of environments. While some of the first synapsids had scales, others are thought to have had tough, naked skin. In general, they were slow-moving, cold-blooded animals – but they still found a way to get their claws on their favorite meal: meat.

Pioneers of terror

Back in the Permian, the synapsids were utterly unlike anything which had come before. And one of the features that really set them apart from the competition was their mouthfuls of teeth. Whether an animal's diet called for crushing, chewing, tearing or snipping off chunks of food – often flesh – these beasts were well-equipped for the task. Rather than just having many similar-shaped teeth like their ancestors, they had a whole Swiss Army knife in their mouths, from incisors to canines.

"So, the herbivores are eating loads of different plants that provide more nutrients," says Singh. This allowed the herbivores to grow larger bodies which, in turn, meant more calories for carnivores – allowing the carnivores to become giants too. "Synapsids got big really quickly," says Singh. Soon Pangaea was swarming with predators.

Enter Dimetrodon, the Permian's answer to the Komodo dragon. These animals were three-and-a-half times bigger than their modern counterparts, weighing up to 250kg (551lbs) and somewhat more imposing – with tall, radiating "sails" that ran along the entire lengths of their backs. These apex predators swaggered around the swampier parts of Pangaea for tens of millions of years, eating anything they could get their teeth into – from small reptiles and amphibians to titanic, barrel-bodied synapsids like Cotylorhynchus.

At one site in Texas, paleontologists found that there were 8.5 times more Dimetrodon than there were large prey animals – a ratio suggesting a radical overabundance of predators, compared to what you might expect based on modern food chains. (In a private game reserve in South Africa today, for example, a typical lioness might kill around 16 large prey animals per year.)

This mysterious so-called "meat shortage" on land was solved, however, when scientists discovered the sail-backed predator's teeth mingled with the skeletons of Xenacanthus sharks. Dimetrodon had been filling the gaps in its diet by hunting the jumbo freshwater fish – and vice versa. Near the Xenacanthus remains, the researchers found Dimetrodon bones which had been chewed up by Xenacanthus.

Like other early synapsids, Dimetrodon would have had a splayed walk reminiscent of that of crocodiles

But one feature of Dimetrodon has left scientists pondering for well over a century: what were the spiny "sails" on their backs for? In 1886, the luxuriously moustachioed paleontologist Edward Drinker Cope suggested that a similar feature on a close relative of the genus might have acted as a series of literal sails, like those on a boat. Cope speculated that the animals used their sails to cruise around lakes, catching the wind. However, Cope was no stranger to a spectacular blunder – and he was wrong.

The next idea was that Dimetrodon's sail acted like a solar panel, helping the animals to warm up quickly so that they could chase down their prey. Alas, the laws of physics put that theory down too. Using Dimetrodon's size to estimate its typical metabolic rate, researchers calculated that their sails would have been useless for thermoregulation in smaller members of the group, which nevertheless still invested heavily in building these elaborate structures. In fact, the sails could have put some species of Dimetrodon at risk of hypothermia, by radiating heat away from the body. Instead, it's thought that they played a role in courtship, helping the monsters to entice mates.

As the Permian progressed, so did Dimetrodon's gastronomic tastes. While initially they tended to hunt prey smaller than or the same size as themselves, eventually they graduated to more ambitious feasts – tackling increasingly large prey. And here again, teeth were everything: later Dimetrodon had serrated and curved teeth, ideal for gripping and tearing flesh from prey that couldn't be swallowed whole. They could also replace their teeth if they were lost or broken – a major advantage if you're shearing off tough chunks of meat.

But despite their serrated teeth, Dimetrodon never quite honed all the equipment necessary for efficiently capitalizing on the new abundance of very large prey, says Singh. What Permian super-carnivores really needed, he explains, were wider jaws. This would create more room for muscles to attach, allowing for a more powerful bite.

And this left a gap in the market. Other flesh-eaters were more than happy to fill it.

Slick predators

The largest predator of the Permian was Anteosaurus. Like the mutant offspring of a tiger and a hippo, they grew to around 6m (19.7ft) long, with an appetite to match. "It's quite a prize [when you excavate one], because you don't find a lot of them," says Benoit. With muscular jaws, powerful arms and tough, bone-crunching teeth, these dominant carnivores reigned across Pangaea around 260 to 265 million years ago.

To add to their spine-chilling look, and frame their massive teeth, Anteosaurus had ridges of bone on their skulls above the eye sockets, evoking the ears of a big cat. "They would have been super scary to look at… it's the closest thing you have to a T. rex in the Permian," says Benoit. "The head, in general, is very well designed for killing large animals and crushing their bones," he says.

Benoit thinks of Anteosaurus as the cheetah of its day

The predators were also surprisingly fast. In 2021, Benoit and colleagues looked at the inner ear of Anteosaurus in detail, squeezing the skull of a juvenile, roughly the equivalent of a young teenager, into a CT scanner. The region is often fine-tuned for balance in agile hunters, and the researchers found that this specimen's inner ear was radically different from that of other synapsids. He compares the predator's unique adaptations to those of cheetahs or Velociraptor. "It's very, very special," he says. "It's very well developed."

The team also found features in the brain which indicated that Anteosaurus had an impressive ability to stabilize its gaze. "So that means, when it locked on a prey, it would not stop following it," says Benoit.

But the supremacy of Anteosaurus turned out to be short-lived – they vanished in a mass extinction around 260 million years ago. Soon it was the time of the gorgonopsians, the mightiest of which were Inostrancevia.

With saber-fangs and skulls up to 70cm (2.3ft) long, Inostrancevia were fast, dynamic hunters in the mold of polar bears. "If you include the root, you're looking at teeth in the neighborhood of 20-30cm long (8-12in)," says Christian Kammerer, a research curator in palaeontology at the North Carolina Museum of Natural Sciences. There's very little evidence of what their skin would have looked like, but based on scraps of fossilized skin from other synapsids, he thinks they most likely had a thick, rhino-like hide.

To be eaten by one of these Permian predators would have been an abrupt, gruesome affair.

Like Benoit's gorgonopsian skull, Inostrancevia have been found in the Karoo basin, a fossil site in South Africa just south of the Kalahari Desert that has yielded thousands of fossils from the Permian.

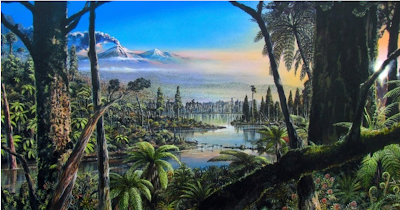

Today the Karoo is a swathe of dry, open plains the size of Germany, known as the "land of thirst". But 250 million years ago, the region was relatively lush – centered around an inland sea fed by a network of rivers. "There would have been ferns and horsetails and early sorts of gymnosperms like pine trees, ginkgos. At this point in time, there were no flowering plants, so no flowers, no grass of any kind," says Kammerer.

In this prehistoric environment, large prey were abundant. Huge herds of dicynodonts – hippo-like herbivores with beaks similar to those of tortoises – roamed the landscape alongside colossal, heavily armored reptiles known as pareiasaurs. The first sign of danger for these ambling plant-eaters was probably an Inostrancevia leaping out of a thicket or from behind a hill, says Kammerer. Based on their bodily proportions, he thinks they were likely to have been ambush predators.

After a short chase, Kammerer suggests that Inostrancevia may have subdued its prey with its forelimbs and gone for the kill with its powerful jaws and saber-teeth – possibly using them for a bit of disemboweling. Then they would rip off chunks of flesh and swallow them whole. "They were incapable of chewing," says Kammerer.

Inostrancevia had an 'indeterminate' growth pattern, like tortoises – they just kept getting bigger as long as they lived

Inostrancevia could afford to be a bit careless. Unlike the saber-toothed cats which inhabited the world much more recently and possibly overlapped with modern humans, Inostrancevia could easily replace broken or lost teeth, like sharks and many reptiles do. "[Fossilized] saber-toothed cats that are found with broken fangs are often inferred to have died of starvation as a result of that," says Kammerer.

However, for all their adaptations as pro-level hunters, Kammerer believes that the very presence of Inostrancevia in South Africa was an ominous sign – one which foreshadowed the greatest mass extinction in Earth's history. Because as it happens, they should never have been there at all.

Until recently, the only Inostrancevia ever discovered had been found in Russia, which even back in the Permian would have been on the other side of the world to the Karoo – separated by a 7,000-mile (11,265km) trek across the inhospitable center of Pangaea. Instead, South Africa was thought to have been exclusively populated with other, smaller gorgonopsians, like Benoit's face-biters.

Then around a decade ago, a fossil collector stumbled upon an Inostrancevia in the Karoo. Kammerer was intrigued. "I immediately thought, how is this here?" he says.

A Great Dying

Today a clue remains in the form of the Siberian Traps, a region spanning around 5 million sq km (1.9 million sq miles), made entirely of basaltic rock. The area was formed at the end of the Permian, during a period of intense volcanic activity which spewed out 10 trillion tons of lava.

This is thought to have increased the levels of carbon dioxide in the Earth's atmosphere to around 8,000 parts per million (ppm), compared to roughly 425ppm today. Before long, the global temperature had spiked dramatically, wiping out thousands of species on land and in the oceans. During the Great Dying, or the Permian-Triassic mass extinction, around 90% of all species went extinct.

"So we think that the world got incredibly hot, probably the hottest it's been for the last billion years," says Wignall. Not only did this make survival difficult on land, but it was particularly catastrophic for aquatic life. "The effect of a really hot planet was to sort of slow down or stagnate the oceans, and so they basically lost their oxygen over a large part of the water column. Without any oxygen dissolved in the water, things die off," he says.

But unlike in the movies, this end-of-world event did not happen instantly. "I think when people are thinking about mass extinctions, we often go to the one that wiped out the dinosaurs… an asteroid hits the earth, vaporizes everything around it, and then kicks up a dust cloud – and there's effectively nuclear winter for a long time," says Kammerer. The Permian extinction, on the other hand, unfolded over hundreds of thousands of years, he explains.

Now it turns out that the gorgonopsians which had originally inhabited the Karoo went quietly extinct long before the Great Dying reached its peak. Inostrancevia simply crossed Pangaea to fill the predator-sized hole that they had left behind. In the Karoo basin, Kammerer points out that ecosystems were becoming destabilized well before the main extinction pulse. Predators were going extinct, and being swiftly replaced by others. And he thinks this holds a lesson for us today: we're further along in the extinction crisis than we might care to admit.

"One example of what we've already seen is here in North America, historically, we had quite a large contingent of sort of top predatory mammals, so bears, pumas and wolves," says Kammerer. Now, in their absence, formerly mid-tier predators such as coyotes are becoming dominant. "They're aggressively expanding their ranges, living in a lot of areas where they didn't before and taking over the de facto role of top predator," he says.

In the end, even Inostrancevia didn't make it – they vanished 251 million years ago, along with all other gorgonopsians and the vast majority of their synapsid relatives. But a handful of species did manage to cling on to existence, living to terrorize the wildlife of the Triassic.

Today, synapsid predators are still with us. Eventually, some of the survivors from the Permian extinction evolved their own central heating, fur and the ability to nourish their offspring with milk: the strange monsters of the Permian are the ancestors of all mammals alive today, including humans.

https://www.bbc.com/future/article/20250624-the-bloodcurdling-permian-monsters-that-ruled-the-earth-before-dinosaurs

*

IT’S TIME TO STOP PURSUING HAPPINESS

Like many teenagers, I was once plagued with angst and dissatisfaction – feelings that my parents often met with bemusement rather than sympathy. They were already in their 50s, and, having grown up in postwar Britain, they struggled to understand the sources of my discontentment at the turn of the 21st century.

“The problem with your generation is that you always expect to be happy,” my mother once said. I was baffled. Surely happiness was the purpose of living, and we should strive to achieve it at every opportunity? I simply wasn’t prepared to accept my melancholy as something that was beyond my control.

The ever-growing mass of wellness literature would seem to suggest that many others share my view. As a writer covering the latest research, however, I have noticed a shift in thinking, and I am now coming to the conclusion that my mother’s judgment was spot on. Over the past 10 years, numerous studies have shown that our obsession with happiness and high personal confidence may be making us less content with our lives, and less effective at reaching our actual goals. Indeed, we may often be happier when we stop focusing on happiness altogether.

Let’s first consider the counterintuitive ways that the conscious pursuit of happiness can influence our mood, starting with a study by Iris Mauss at the University of California, Berkeley. The participants were first asked to rate how much they agreed with a series of statements such as: “I value things in life only to the extent that they influence my personal happiness” and “I am concerned about my happiness even when I feel happy.”

The people who scored high should have been seizing each day for its last drop of joy, yet Mauss found they tended to be less satisfied with their everyday lives, and were more likely to have depressive symptoms even in times of relatively low stress.

Various factors may have caused that link, of course, but a second study suggested a strong causal connection. In this experiment, Mauss asked half the participants to read a paragraph expounding the benefits of feeling good, and then had them watch a feelgood film about a professional figure skater. Far from enhancing their enjoyment of the inspirational story, the focus on their own happiness had muted their joy – compared with the second group of participants, who had been given a dry article to read about the importance of rational judgment.

These findings have now been replicated many times, with many more experiments revealing a dark side to the pursuit of happiness. As well as reducing everyday contentment, the constant desire to feel happier can make people feel more lonely. We become so absorbed in our own wellbeing, we forget the people around us – and may even resent them for inadvertently bringing down our mood or distracting us from more “important” goals.

The pursuit of happiness can even have strange effects on our perceptions of time, as the constant "fear of missing out" reminds us just how short our lives are and how much time we must spend on less-than-thrilling activities. In 2018, researchers at the University of Toronto found that simply encouraging people to feel happier while watching a relatively boring film meant that they were more likely to endorse the statement "time is slipping away from me."

The same was true when the participants were asked to list 10 activities that might contribute to their happiness: the reminder of all that they could be doing to improve their wellbeing placed them in a kind of panic, as they recognized how little time they had to achieve it all.

Perhaps most important, paying constant attention to our mood can stop us from enjoying everyday pleasures. Surveying participants in the UK, Dr Bahram Mahmoodi Kahriz and Dr Julia Vogt at the University of Reading have found that the people who scored highest on Mauss’s questionnaire felt less excitement and anticipation for forthcoming events, and were less likely to savor the moment during the events themselves. They were also less likely to look back fondly on a fun event in the days afterwards – it just occupied less of their headspace.

“They have such a high standard for achieving happiness that they don’t appreciate the small and simple things that are really meaningful in their life – and they are more unhappy as a result,” says Mahmoodi Kahriz.

These lessons may be especially important during the pandemic. The peaks in our mood may be few and far between, but a simple appreciation of the small pleasures amid the stress could help ease us through the day-to-day anxieties, Mahmoodi Kahriz says. That will be much harder for people who are constantly thinking about their happiness, since they'll always be lamenting the loss of the many more exciting activities that they could have been doing.

The law of repulsion

If the general pursuit of happiness is problematic, specific strategies designed to bring about greater contentment can also backfire.

Consider the oft-cited technique of "visualizing your success". A student might imagine themselves in mortarboard and gown; an athlete with a gold medal around their neck; someone on a diet might picture the new clothes they'll be wearing at the end of their regime.

The idea lies behind bestselling books such as The Power of Positive Thinking by Norman Vincent Peale and often features in inspirational biographies. It seems to make sense that thoughts of success could boost our motivation and self-confidence. What’s wrong with imagining a better future for yourself?

Quite a lot, according to research by Prof Gabriele Oettingen and colleagues at New York University, which has shown that this intuition is counterproductive. One of her first studies found that dieters who spend some time imagining their newer, healthier figure tend to lose less weight than dieters who do not engage in such fantasies. Similarly, students who daydream about their future jobs are less likely to gain employment after university than students who don’t contemplate their successes in such vivid detail.

The researchers suspect that the positive fantasies – and the positive moods that they create – can lead to a sense of complacency. “You feel good about the future, with no urgency to act,” says Dr Sandra Wittleder, a postdoctoral fellow at NYU. This process could be seen at play in a recent study tracking students’ progress over the course of two months: the more they reported fantasizing about their success, the less time they spent studying for their exams – presumably because, at an unconscious level, they assumed they were already well on the way to getting a good grade. Inevitably, they performed worse overall.

Not only do these fantasies reduce the chances of success, the failures pack an even greater emotive punch once you compare your previous hopes with your current circumstances. Echoing Mauss’s research on the pursuit of happiness, Oettingen’s team found that the students who had engaged in this kind of positive thinking suffered a greater number of depressive symptoms months down the line.

If you really want to succeed, you'd do far better to engage in "mental contrasting", which involves combining your fantasies of success with a deliberate analysis of the obstacles in your path and the frustrations you are likely to face. Someone going on a diet, for example, might think about the benefits for their health before considering the temptation of junk food and the ways it could stop them from reaching that goal. By contemplating these potential failures, they may not feel so good in the short term, but many studies have shown that this simple practice can increase motivation and improve success in the long run. "It creates a kind of tension or excitement," says Wittleder, who has shown that the method can help dieters to avoid temptation and eat more healthily.

Black and white thinking

These unexpected effects should give pause for thought to anyone striving for even greater contentment – a topic that will be on many people’s minds as a new year begins. If we go about it in the wrong way, an overambitious set of resolutions will only set us up for stress, disappointment and loneliness.

Rather than making an elaborate list of life changes, we should aim for fewer, more realistic goals, and be aware that even some apparently benign habits are best used sparingly. You will have heard that keeping a “gratitude journal” – in which you regularly count your blessings – can increase your overall wellbeing, for example. Yet research shows that we can overdose on this. In one study, people who counted their blessings once a week showed the expected rise in life satisfaction, but those who counted their blessings three times a week actually became less satisfied with their life. “Doing the activity can itself feel like a chore, rather than something you actually enjoy,” says Dr Megan Fritz at the University of Pittsburgh, who recently reviewed the conflicting evidence for various happiness interventions.

You should also reset your expectations of the path ahead. While greater contentment is achievable, don’t expect miracles, and accept that no matter how hard you try, feelings of frustration and unhappiness will appear from time to time. In reality, certain negative feelings can serve a useful purpose. When we feel sad, it’s often because we have learned something painful but important, while stress can motivate you to make some changes to your life.

Simply recognizing the purpose of these emotions, and accepting them as an inevitable part of life, may help you to cope better than constantly trying to make them disappear. Any effort that we make – whether it’s specifically aiming at greater happiness, or other measures of success – will come with some challenges and disappointments, and the last thing you should do is blame yourself for occasionally feeling bad when plans don’t work out.

Ultimately, you might adopt the old adage "Prepare for the worst, hope for the best, and be unsurprised by everything in between." As my mother tried to teach me all those years ago, take the pressure off yourself, and you may just find that contentment arrives when you're least expecting it.

*

THE BIBLE AND THE QUESTION OF HUMAN AND ANIMAL SACRIFICE

In places the bible seems to clearly teach that the biblical god Yahweh demanded innocent blood in the form of animal sacrifices.

But as we will see, seven Hebrew prophets strongly disagreed, saying god did NOT want sacrifices, and two of them, Jeremiah and Amos, accused the Levites of changing the bible to benefit themselves.

Why?

Because the Levites got to eat the best cuts of meat without working like everyone else.

The prophet Jeremiah said the "lying pen" of the Levite scribes had changed the torat YHWH, which can be interpreted as both the Law of the Lord and the Bible of God or Word of God.

How can you say, “We are wise, for we have the law of the Lord,” when actually the lying pen of the scribes has handled it falsely? (Jeremiah 8:8 NIV)

Jeremiah denied, point-blank, that god ordained animal sacrifices during the Exodus, when the Ten Commandments were given to Moses:

Thus saith Yahweh of hosts, God of Israel: "Add your burnt-offerings to your sacrifices, and eat the flesh. For in the day that I brought your ancestors out of the land of Egypt, I did NOT speak to them or command them concerning burnt-offerings and sacrifices." (Jeremiah 7:21-22)

BTW, what Jeremiah said here blatantly contradicts the idea that god demanded the “sacrifice” of Jesus.

The prophet Amos agreed with Jeremiah that deceitful Levite scribes had changed the Bible to demand animal sacrifices, which allowed them to eat the best cuts of meat without working like everyone else.

Amos asked ironically and rhetorically:

“Did you [indeed] bring me sacrifices and offerings the forty years in the wilderness, O house of Israel?” (Amos 5:25)

DID JESUS HAVE TO DIE IN ORDER FOR SINS TO BE FORGIVEN?

Yes, according to christian theology.

No, according to the oldest gospel, Mark, which does not portray Jesus' death as a sacrifice for sin. Mark portrays Jesus pardoning sins during his life on earth, without any sacrifice (Mark 2:1-12).

The gospel of John even says Jesus authorized his disciples to forgive sins (John 20:23), although John sets this scene after the resurrection rather than before Jesus' death.

Luke 5:17-26 has Jesus not only saying that he could forgive sins before the crucifixion, but that it was easier for him to forgive sins than to heal people. Luke 7:36-50 has Jesus forgiving the sins of a woman who anointed his feet. Luke 23:39-43 says Jesus forgave the sins of the thief on the cross, while Jesus was still alive. But there is another contradiction here, because Matthew and Mark say both thieves reviled Jesus (Matthew 27:44, Mark 15:32), and neither gospel says that either thief was saved.

So which is it?

*

Who can offer sacrifices to god?

The Levites said only they could offer sacrifices, and only in the tabernacle. This was "an ordinance forever." (Numbers 18:6, Leviticus 17:1-5)

And yet Samuel, who was not a Levite but an Ephraimite (1 Samuel 1:1), offered sacrifices to god at Mizpeh, and the bible says god heard him. (1 Samuel 7:7-9)

However, seven biblical prophets denied that Yahweh desired bloody sacrifices, and in often blistering terms: Amos, Hosea, Isaiah, Jeremiah, Micah, Samuel and King David. (Amos 5:21-25, Hosea 6:6, Isaiah 1:11-15, Jeremiah 7:22, 14:12, Micah 6:6-8, 1 Samuel 15:22, Psalm 40:6, 51:16) Jesus quoted two of these prophets: he cited Hosea 6:6 ("I desired mercy, and not sacrifice") twice (Matthew 9:13, 12:7), and while cleansing the temple he quoted Jeremiah 7:11, from the same chapter as Jeremiah 7:22 (Matthew 21:13).

Two prophets, Jeremiah and Amos, said god did not command animal sacrifices at the time of Moses and accused the Levites of changing the bible to benefit themselves. Why? Because the Levites got to eat the best cuts of meat without working like everyone else.

Does god want human sacrifices?

No!

Well, at least children should not be sacrificed to other gods. (Leviticus 18:21, Leviticus 20:2, Deuteronomy 12:31)

Yes, when the god is Yahweh!

Jesus is the sacrifice god always wanted, according to christian theology. (1 Corinthians 5:7, 1 Peter 1:18-19, Hebrews 9:13-22, Hebrews 10:10)

Yahweh commanded Israelites to give him their firstborn sons.

Thou shalt not delay to offer the first of thy ripe fruits, and of thy liquors: the firstborn of thy sons shalt thou give unto me. (Exodus 22:29)

Yahweh said every “devoted thing ... both of man and beast ... shall surely be put to death.”

No devoted thing, that a man shall devote unto the LORD of all that he hath, both of man and beast ... shall be sold or redeemed: every devoted thing is most holy unto the LORD. None devoted, which shall be devoted of men, shall be redeemed; but shall surely be put to death. (Leviticus 27:28-29)

The Levites apparently went back on this when they realized they couldn’t eat human sacrifices or unclean animals. So they revised the “no redemption” thing in Numbers 18:15-16 so that firstborn children and unclean animals could be redeemed for — ta-da! — cold cash paid to the Levites.

Moses ordered his officers to kill every Midianite male and non-virgin female, but to keep the virgin girls alive for themselves, except for one per thousand, who were to be given to god as “booty” and “tribute.” (Numbers 31:25-40) The commanders found 32,000 virgins, 32 of whom became God's “share” and 16 of whom were sacrificed as a "heave offering." [The term "heave" refers to the act of lifting or raising the offering, symbolizing its dedication to God.]

The “Spirit of the Lord” inspired Jephthah to sacrifice whoever came out to greet him first when he returned from slaughtering Ammonites. When his daughter greeted him, Jephthah kept his vow by killing her as a burnt offering to god. (Judges 11:29-40)

In order to end a famine David sacrificed two of Saul's sons and five of his grandsons and hung them up "unto the Lord." The five grandsons of Saul were by David’s wife Michal, the daughter of Saul; were they David’s own children? (2 Samuel 21:1, 8-14)

Josiah "did that which was right in the eyes of the Lord" when he killed "all the priests of the high places" and burnt their bones upon their altars. (1 Kings 13:1-2, 2 Kings 23:20, 2 Chronicles 34:1-5)

God told Abraham to sacrifice his son Isaac as a burnt offering. (Genesis 22:2)

Human Sacrifice

“… Thou shalt not let any of thy seed pass through the fire to Molech, neither shalt thou profane the name of thy God…” — Leviticus 18:21

And yet:

The Bible says its god demanded the sacrifice of his only son, Jesus, who was human. The Bible says Jesus went into the fires of hell to preach to the wicked from the time of Noah. The Bible equates Gehenna, where children were sacrificed to the fires of Moloch, with hell — then says its god did the same thing, in the same place!

Jephthah, who led the Israelites against the Ammonites, sought to guarantee victory by making a devilish deal with God: “If thou shalt without fail deliver the children of Ammon into mine hands, Then it shall be, that whatsoever cometh forth of the doors of my house to meet me, when I return in peace from the children of Ammon, shall surely be the LORD’s, and I will offer it up for a burnt offering.” — Judges 11:30-31

These terms were acceptable to god — who is omniscient and knows the future — so he gave the victory to Jephthah, and the first person who greeted him upon his return was his daughter, as God knew it would happen. True to his vow, Jephthah sacrificed his daughter to god, who didn’t bother to stop him. — Judges 11:29-34

Sacrifice in General

The Bible also disagrees on sacrifices in general. Christians believe sins can only be forgiven through the “sacrifice” of Jesus. In some verses the Bible claims its god lusted after the “sweet savor” of burning flesh like a ravenous animal or a drunk at a barbecue. But six biblical prophets said god did not want, much less demand, sacrifices, and Jesus quoted two of them.

For instance, Jesus said:

“But go and learn what this means: 'I desire mercy, not sacrifice.' For I have not come to call the righteous, but sinners.” (Matthew 9:13)

Jesus was quoting the prophet Hosea in Hosea 6:6.

The prophet Jeremiah denied that god ordained animal sacrifices during the Exodus, when the Ten Commandments were given to Moses:

Thus saith Yahweh of hosts, god of Israel: “Add your burnt-offerings to your sacrifices, and eat the flesh. For in the day that I brought your ancestors out of the land of Egypt, I did not speak to them or command them concerning burnt-offerings and sacrifices.” (Jeremiah 7:21-22)

What Jeremiah said blatantly contradicts the idea that god demanded the purported “sacrifice” of Jesus.

Jeremiah directly accused the Levite scribes of changing the Torah:

How do ye say, We are wise, and the law of the LORD is with us? Lo, certainly in vain made he it; the pen of the scribes is in vain. (Jeremiah 8:8 KJV)

The NIV translates the same accusation more accurately:

How can you say, “We are wise, for we have the law of the LORD,” when actually the lying pen of the scribes has handled it falsely? (Jeremiah 8:8 NIV)

The prophet Amos agreed with Jeremiah that the deceitful Levite scribes had changed the Bible to demand animal sacrifices, which allowed them to eat the best cuts of meat without working like everyone else. Amos asks ironically and rhetorically: “Did you [indeed] bring me sacrifices and offerings the forty years in the wilderness, O house of Israel?” (Amos 5:25)

Amos also said god despised the festivals at which animals were slaughtered and burnt as “sacrifices,” not to god, but to feed the Levites: “I hate, I reject your feasts, and I will not smell your meat-offerings in your general assemblies.” (Amos 5:21)

Amos completely rejected the idea that burnt offerings were a “sweet savor” to god.

The prophet Micah also denied the need for sacrifices, including the sacrifice of a firstborn son, which by implication rules out Jesus as a sacrifice:

1 Hear ye now what the Lord saith; Arise, contend thou before the mountains, and let the hills hear thy voice. 2 Hear ye, O mountains, the Lord's controversy, and ye strong foundations of the earth: for the Lord hath a controversy with his people, and he will plead with Israel. 3 O my people, what have I done unto thee? and wherein have I wearied thee? testify against me. … 6 Wherewith shall I come before the Lord, and bow myself before the high God? shall I come before him with burnt offerings, with calves of a year old? 7 Will the Lord be pleased with thousands of rams, or with ten thousands of rivers of oil? Shall I give my firstborn for my transgression, the fruit of my body for the sin of my soul? 8 He hath shewed thee, O man, what is good; and what doth the Lord require of thee, but to do justly, and to love mercy, and to walk humbly with thy God? (Micah 6:1-8)

The prophet Isaiah also denied that god had ever desired the flesh and blood of animals.

“But whoever sacrifices a bull is like one who kills a person, and whoever offers a lamb is like one who breaks a dog’s neck; whoever makes a grain offering is like one who presents pig’s blood, and whoever burns memorial incense is like one who worships an idol. They have chosen their own ways, and they delight in their abominations.” (Isaiah 66:3)

Isaiah equates sacrifices with murder, idolatry and offering something unclean (pig’s blood) to god.

“What are all your sacrifices to Me?” asks the Lord. “I have had enough of burnt offerings and rams and the fat of well-fed cattle; I have no desire for the blood of bulls, lambs, or male goats.” (Isaiah 1:11 HCSB)

Long before the time of Jesus, King David believed in salvation by grace, claiming that God did not require sacrifice (Psalm 51:16-17) and could simply choose not to impute sin (Psalm 32:1-2).

1 Samuel 15:22 says that "to obey is better than sacrifice, and to hearken than the fat of rams."

The prophets were pointing out that “sacrifices” were not the commandment of god, but rather were to benefit the Levites, who had changed the Bible to enrich themselves. And the same is true of Christian churches that reap enormous profits by peddling the “sacrifice” of Jesus to the gullible.

I could go on and on, but you get the picture.

DID NOAH KNOW ABOUT CLEAN AND UNCLEAN ANIMALS?

Two different accounts of the Great Flood were merged into one. In one version, Noah was instructed to take seven of each clean animal in order to make sacrifices (but there were no clean or unclean animals until the time of Moses, making this a scribal fraud). In the other version, only two of each species were taken aboard the ark and Noah made no sacrifices. Thus we end up with a comical mess:

(1) God tells Noah to take two of each kind of animal on the ark.

(2) God tells Noah to take seven of each clean kind of animal, allowing lots of bloody sacrifices later.

(3) Noah takes two of each kind of animal, not seven.

(4) Noah sacrifices animals, meaning he made extinct those he sacrificed. Is this why we have no dinosaurs?

(5) God smells the “sweet savor” of all those roasting dinosaurs and decides not to destroy the earth by water again. He creates the rainbow to commemorate this vow, while not telling poor Noah about his “divine plan” to destroy the earth by fire in the future.

(6) But then we’re back to the original author, who knew nothing about “unclean animals,” so we hear god say, “Every moving thing that liveth shall be meat for you.” (Genesis 9:3)

So it’s okay to eat shrimp and pork after all. ~ Michael R. Burch, Quora

Glenn White:

Priesthoods obviously invented sacrifice. Prometheus tricks Zeus into accepting fat, bones and gristle so man can eat the meat. In Genesis sacrifice is essentially the religion.

The animal sacrifice of Abel is acceptable but Cain's crops aren't. Crops are the kind of offering Aten or an Egyptian god of farmers would accept. The sacrifice reflects the culture of the god.

Human sacrifice is more mysterious. Is it older than animal sacrifice? Was animal sacrifice instituted to replace human sacrifice, as the story of Abraham and Isaac indicates? It was never replaced in Mexico, perhaps because no animals were deemed worthy.

Richard:

The incident at 2 Sam 21 where David offers five sons of Michal appears to be a scribal error. The following phrase mentions that the children were Adriel’s and not David’s. Thus the woman should be Merab.

Very convenient that the defeated tribe would have David kill all his potential rivals.

The Sacrifice of Cain and Abel. Mariotto Albertinelli

*

RAPAMYCIN MAY PROLONG LIFE AT A LEVEL SIMILAR TO CALORIE RESTRICTION

A recent meta-analysis published in Aging Cell explored how rapamycin and metformin influenced longevity in several animal species.

The results suggested that dietary restriction prolongs life and that rapamycin offers similar benefits.

The two medications that were the focus of this analysis were rapamycin and metformin.

According to the National Cancer Institute, rapamycin has several uses: it is an immunosuppressant and an antibiotic, and it can help transplant recipients by preventing organ rejection.

This analysis involved a systematic literature search to find relevant data. The final analysis included data from 167 papers covering eight vertebrate species, and it examined how both medications affected longevity and how they compared with dietary restriction.

Researchers extracted information on average and median lifespan from the published papers.

For this analysis, the two types of dietary restriction were caloric reduction and fasting. The researchers also examined whether the results differed based on the sex of the animals involved.

The data came from animals like mice, rats, turquoise killifish, and rhesus macaques. Overall, more males were studied than females. Dietary restriction had the most data, and its most common form was calorie reduction.

The effects of dietary restriction varied greatly.

Overall, researchers found that dietary restriction and rapamycin had a similar impact and appeared to contribute to prolonged life. Metformin appeared to have only a minimal impact on life extension.

Aside from one metformin model, there appeared to be no consistent differences between male and female animals regarding longevity.

This research analyzed animal data but did not include data on humans. Additionally, most of the studies were conducted on laboratory animals and covered only a small number of species.

This meta-analysis was also the work of only three researchers, sometimes with only one researcher doing a component of the work, which could have impacted the results.

Researchers had the least amount of data on metformin, so more research about this medication might be helpful.

They also operated under the assumption that if a paper did not specify male or female subjects, it was a mixed group, which could have been incorrect.

The results show a potential benefit of rapamycin that warrants more research.

https://www.medicalnewstoday.com/articles/rapamycin-may-extend-lifespan-as-effectively-as-dietary-restrictions

Oriana:

Just as berberine has benefits similar to those of metformin (except better: it also dramatically improves the lipid profile), so curcumin appears to be an analog of rapamycin.

*

LONGEVITY AND ANTI-AGING EFFECTS OF CURCUMIN

Aging is a gradual and irreversible process that is accompanied by an overall decline in cellular function and a significant increase in the risk of age-associated disorders. Generally, delaying aging is a more effective method than treating diseases associated with aging. Currently, researchers are focused on natural compounds and their therapeutic and health benefits. Curcumin is the main active substance in turmeric, a spice made from the roots and rhizomes of the Curcuma longa plant. Curcumin has shown a positive effect in slowing the aging process by postponing age-related changes. This compound may exert anti-aging effects by changing the levels of proteins involved in the aging process, such as sirtuins and AMPK, and by inhibiting pro-aging proteins, such as NF-κB and mTOR.